Microsoft Adds MIDI 2.0, Researches AI Text-to-MIDI in 2023

The MIDI Association has enjoyed an ongoing partnership with Microsoft, collaborating to ensure that MIDI software and hardware play nicely with the Windows operating system. All of the major operating system companies are represented equally in the MIDI Association, and participate in standards development, best practices, and more to help ensure the user experience is great for everyone.

As an AI music generator enthusiast, I’ve taken a keen interest in Microsoft Research (MSR) and their machine learning music branch, where experiments in music understanding and generation have been ongoing.

It’s important to note that this Microsoft Research team is based in Asia and enjoys the freedom to experiment without being bound to the product roadmaps of other divisions of Microsoft. That’s something unique to MSR, and gives them incredible flexibility to try almost anything. This means that their MIDI generation experiments are not necessarily an indication of Microsoft’s intention to compete in that space commercially.

That being said, Microsoft has integrated work from their research team in the past, adding derived features to Office, Windows, and more, so it’s not out of the question that these AI MIDI generation efforts might some day find their way into a Windows application, or they may simply remain a fun and interesting diversion for others to experiment with and learn from.

The Microsoft AI Music research team, operating under the name Muzic, started publishing papers in 2020 and has shared over fourteen projects since then. You can find their GitHub repository here.

The majority of Muzic’s machine learning efforts have been based on understanding and generating MIDI music, setting them apart from text-to-music audio generation services like Google’s MusicLM, Meta’s MusicGen, and OpenAI’s Jukebox.

On May 31st, Muzic published a research paper on their first-ever text-to-MIDI application, MuseCoco. It was trained on a reported 947,659 Standard MIDI files (a file format which includes MIDI performance information) across six open source datasets, and the developers found that it significantly outperformed the music generation capabilities of GPT-4 (source).

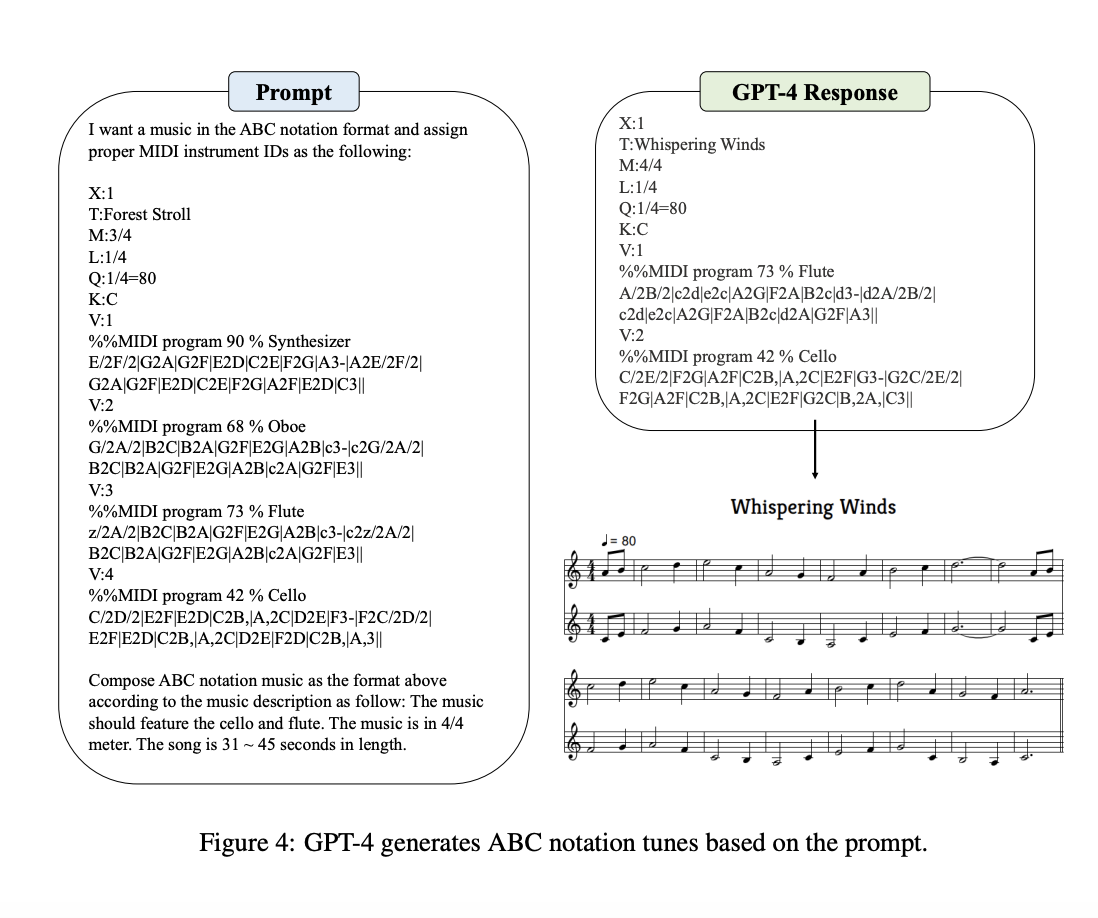

It makes sense that MuseCoco would outperform GPT-4, having trained specifically on musical attributes in a large MIDI training dataset. Details of the GPT-4 prompt techniques were included in Figure 4 of the MuseCoco article, shown below. The developers requested output in ABC notation, a shorthand form of musical notation for computers.
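To give a sense of what ABC notation encodes, here is a minimal Python sketch that maps bare ABC pitch letters to MIDI note numbers. It is an illustration only and is not taken from MuseCoco or from the GPT-4 prompts: the function name `abc_note_to_midi` is hypothetical, and the sketch deliberately ignores accidentals, note lengths, key signatures, and everything else a real ABC parser would handle.

```python
# Semitone offsets of the natural notes above C.
SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def abc_note_to_midi(token: str) -> int:
    """Convert a bare ABC pitch such as "C", "c", "G," or "c'" to a MIDI note number.

    Hypothetical helper for illustration; handles natural notes only.
    """
    letter, marks = token[0], token[1:]
    # In ABC, uppercase letters sit in the octave starting at middle C (MIDI 60);
    # lowercase letters are one octave higher.
    midi = 60 + SEMITONES[letter.upper()] + (12 if letter.islower() else 0)
    # Each apostrophe raises the pitch by an octave; each comma lowers it.
    midi += 12 * marks.count("'") - 12 * marks.count(",")
    return midi


# A C major scale written as space-separated ABC pitches.
tune = "C D E F G A B c"
notes = [abc_note_to_midi(token) for token in tune.split()]
```

Here `notes` holds the MIDI note numbers for the scale, starting at 60 (middle C) and ending at 72, one octave higher.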

I have published my own experiments with GPT-4 music generation, including code snippets that produce MIDI compositions and save the MIDI files locally using Node.js with the MidiWriter library. I also shared some thoughts about AutoGPT music generation, to explore how AI agents might self-correct and expand upon the short duration of GPT-4 MIDI output.
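For readers curious about what saving a MIDI file involves at the byte level, the sketch below builds a tiny Standard MIDI File using only Python's standard library. It is not the Node.js and MidiWriter code referred to above; the helper names `vlq` and `build_smf` are hypothetical, and the fixed velocity and quarter-note durations are assumptions chosen to keep the example short.

```python
import struct


def vlq(value: int) -> bytes:
    """Encode a delta time as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        # Every byte except the last carries a continuation bit (0x80).
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def build_smf(notes, ticks_per_quarter: int = 480) -> bytes:
    """Build a format-0 Standard MIDI File that plays `notes` as quarter notes."""
    track = bytearray()
    for note in notes:
        # Note on (status 0x90, first channel) with an assumed velocity of 100.
        track += vlq(0) + bytes([0x90, note, 100])
        # Note off (status 0x80) one quarter note later.
        track += vlq(ticks_per_quarter) + bytes([0x80, note, 64])
    # End-of-track meta event, required at the end of every track chunk.
    track += vlq(0) + b"\xFF\x2F\x00"
    # Header chunk: length 6, format 0, one track, time division in ticks.
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_quarter)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


# A C major triad played one note at a time.
data = build_smf([60, 64, 67])
```

Writing `data` to disk in binary mode, for example with `open("example.mid", "wb")`, should produce a file that a DAW or media player can open.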

Readers who don’t have experience with programming can still explore MIDI generation with GPT-4 through a browser DAW called WavTool. The application includes a chatbot that understands basic instructions about MIDI and can translate text commands into MIDI data within the DAW. I speak regularly with their founder Sam Watkinson, and we anticipate some big improvements in the coming months.

Unlike WavTool, there is currently no user interface for MuseCoco. As is common with research projects, users clone the repository locally and then use bash commands in the terminal to generate MIDI data. This can be done either on a dedicated Linux install, or on Windows through the Windows Subsystem for Linux (WSL). There are no publicly available videos of the service in action and no repository of MIDI output to review.

You can explore a non-technical summary of the full collection of Muzic research papers to learn more about their efforts to train machine learning models on MIDI data.

Although non-musicians often associate MIDI with .mid files, MIDI is much larger than just the Standard MIDI File format. It was originally designed as a way to communicate between two synthesizers from different manufacturers, with no computer involved. Musicians tend to use MIDI extensively for controlling and synchronizing everything from synthesizers and sequencers to lighting and even drones. It is one of the few standards which has stood the test of time.

Today, there are different toolkits and APIs; USB, Bluetooth, and network transports; and the new MIDI 2.0 standard, which expands upon what MIDI 1.0 has evolved to do since its introduction in 1983.

MIDI 2.0 updates for Windows in 2023

While conducting research for this article, I discovered the Windows music dev blog where it just so happens that the Chair of the Executive Board of the MIDI Association, Pete Brown, shares ongoing updates about Microsoft’s MIDI and music efforts. He is a Principal Software Engineer in Windows at Microsoft and is also the lead of the MIDI 2.0-focused Windows MIDI Services project.

I reached out to Pete directly and was able to glean the following insights.

Q: I understand Microsoft is working on MIDI updates for Windows. Can you share more information?

A: Thanks. Yes, we’re completely revamping the MIDI stack in Windows to support MIDI 2.0, but also add needed features to MIDI 1.0. It will ship with Windows, but we’ve taken a different approach this time, and it is all open source so other developers can watch the progress, submit pull requests, feature requests, and more. We’ve partnered with AMEI (the Japan equivalent of the MIDI Association) and AmeNote on the USB driver work. Our milestones and major features are all visible on our GitHub repo and the related GitHub project.

Q: What is exciting about MIDI 2.0?

A: There is a lot in MIDI 2.0 including new messages, profiles and properties, better discovery, etc., but let me zero in on one thing: MIDI 2.0 builds on the work many have done to extend MIDI for greater articulation over the past 40 years, extends it, and cleans it up, making it more easily used by applications, and with higher resolution and fidelity. Notes can have individual articulation and absolute pitch, control changes are no longer limited to 128 values (0-127), speed is no longer capped at the 1983 serial 31,250 bps, and we’re no longer working with a stream of bytes, but instead with a packet format (the Universal MIDI Packet or UMP) that translates much better to other transports like network and BLE. It does all this while also making it easy for developers to migrate their MIDI 1.0 code, because the same MIDI 1.0 messages are still supported in the new UMP format.

At NAMM, the MIDI Association showcased a piano with the plugin software running in Logic under macOS. Musicians who came by and tried it out (the first public demonstration of MIDI 2.0, I should add) were amazed by how much finer the articulation was, and how enjoyable it was to play.
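To make the Universal MIDI Packet mentioned in the answer above a little more concrete, here is a minimal Python sketch that packs a note-on message two ways: as a MIDI 1.0 channel voice message carried in a single 32-bit UMP word, and as a MIDI 2.0 note-on spread over two words with a 16-bit velocity. The bit layout reflects my reading of the UMP format and should be checked against the specification; the function names are hypothetical, and this is not code from Windows MIDI Services.

```python
def ump_midi1_channel_voice(group: int, status: int, channel: int,
                            data1: int, data2: int) -> int:
    """Pack a MIDI 1.0 channel voice message into one 32-bit UMP word.

    `status` is the upper nibble of the MIDI 1.0 status byte (0x9 = note on).
    """
    if not (0 <= group <= 15 and 0 <= channel <= 15 and 0x8 <= status <= 0xE):
        raise ValueError("group, channel, or status out of range")
    if not (0 <= data1 <= 127 and 0 <= data2 <= 127):
        raise ValueError("MIDI 1.0 data bytes are 7-bit values")
    # Message type 0x2 marks a MIDI 1.0 channel voice message inside UMP.
    return ((0x2 << 28) | (group << 24) | (status << 20)
            | (channel << 16) | (data1 << 8) | data2)


def ump_midi2_note_on(group: int, channel: int, note: int, velocity16: int) -> tuple:
    """Pack a MIDI 2.0 note-on into two 32-bit words, with no note attribute."""
    if not (0 <= group <= 15 and 0 <= channel <= 15 and 0 <= note <= 127):
        raise ValueError("group, channel, or note out of range")
    if not (0 <= velocity16 <= 0xFFFF):
        raise ValueError("MIDI 2.0 velocity is a 16-bit value")
    # Message type 0x4 marks a MIDI 2.0 channel voice message.
    word0 = (0x4 << 28) | (group << 24) | (0x9 << 20) | (channel << 16) | (note << 8)
    # Upper 16 bits: velocity. Lower 16 bits: attribute data (unused here).
    word1 = velocity16 << 16
    return word0, word1


# Middle C on the first channel of group 0, in both forms.
legacy = ump_midi1_channel_voice(group=0, status=0x9, channel=0, data1=60, data2=100)
modern = ump_midi2_note_on(group=0, channel=0, note=60, velocity16=0xFFFF)
```

The first form keeps the familiar 7-bit note and velocity bytes, which is part of why existing MIDI 1.0 code is easy to carry over, while the second shows where the extra resolution lives.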

Q: When will this be out for customers?

A: At NAMM 2023, we (Microsoft) had a very early version of the USB MIDI 2.0 driver out on the show floor in the MIDI Association booth, demonstrating connectivity to MIDI 2.0 devices. We have hardware and software developers previewing bits today, with some official developer releases coming later this summer and fall. The first version of Windows MIDI Services for musicians will be out at the end of the year. That release will focus on the basics of MIDI 2.0. We’ll follow on with updates throughout 2024.

Q: What happens to all the MIDI 1.0 devices?

A: Microsoft, Apple, Linux (ALSA Project), and Google are all working together in the MIDI Association to ensure that the adoption of MIDI 2.0 is as easy as possible for application and hardware developers, and musicians on our respective operating systems. Part of that is ensuring that MIDI 1.0 devices work seamlessly in this new MIDI 2.0 world.

On Windows, for the first release, class-compliant MIDI 1.0 devices will be visible to users of the new API and seamlessly integrated into that flow. After the first release is out and we’re satisfied with performance and stability, we’ll repoint the WinMM and WinRT MIDI 1.0 APIs (the APIs most apps use today) to the new service so they have access to the MIDI 2.0 devices in a MIDI 1.0 capacity, and also benefit from the multi-client features, virtual transports, and more. They won’t get MIDI 2.0 features like the additional resolution, but they will be up-leveled a bit, without breaking compatibility. When the MIDI Association members defined the MIDI 2.0 specification, we included rules for translating MIDI 2.0 protocol messages to and from MIDI 1.0 protocol messages, to ensure this works cleanly and preserves compatibility.

Over time, we’d expect new application development to use the new APIs to take advantage of all the new features in MIDI 2.0.
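One part of translating between the two protocols, as described in the answer above, is converting values between resolutions, for example a 7-bit MIDI 1.0 velocity and a 16-bit MIDI 2.0 velocity. The Python sketch below shows one way to do this so that the minimum, center, and maximum values map onto each other. It is modeled on the bit-repeat approach as I understand it from the MIDI 2.0 documentation; the function names are hypothetical, and the specification remains the authority on the exact rules.

```python
def scale_up(value: int, src_bits: int, dst_bits: int) -> int:
    """Scale a value to a higher resolution, preserving min, center, and max."""
    scale_bits = dst_bits - src_bits
    shifted = value << scale_bits
    src_center = 1 << (src_bits - 1)
    # Values at or below the center are scaled with a plain shift.
    if value <= src_center:
        return shifted
    # Above the center, repeat the low bits of the source value into the
    # newly created low bits so the source maximum reaches the new maximum.
    repeat_bits = src_bits - 1
    repeat_value = value & ((1 << repeat_bits) - 1)
    if scale_bits > repeat_bits:
        repeat_value <<= scale_bits - repeat_bits
    else:
        repeat_value >>= repeat_bits - scale_bits
    while repeat_value:
        shifted |= repeat_value
        repeat_value >>= repeat_bits
    return shifted


def scale_down(value: int, src_bits: int, dst_bits: int) -> int:
    """Scale a value to a lower resolution by discarding the low bits."""
    return value >> (src_bits - dst_bits)


# A full-strength MIDI 1.0 velocity and a center value, scaled to 16 bits.
loudest = scale_up(127, 7, 16)
center = scale_up(64, 7, 16)
```

A plain left shift alone would leave the loudest 7-bit value short of the 16-bit maximum, which is why the upper half of the range gets the extra bit-repeat step. Scaling a 7-bit value up and back down with these two functions returns the original value.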

Q: How can I learn more?

A: Visit https://aka.ms/midirepo for the Windows MIDI Services GitHub repo, Discord link, GitHub project backlog, and more. You can also follow along on my MIDI and Music Developer blog at https://devblogs.microsoft.com/windows-music-dev/ . To learn more about MIDI 2.0, visit https://midi.org .

If you enjoyed this article and want to explore similar content on music production, check out AudioCipher’s reports on AI Bandmates, Sonic Branding, Sample Managers, and the latest AI Drum VSTs.