Jordan Rudess and MIT’s jam_bot: A New Era of Human-AI Musical Collaboration

Imagining our augmented now and the future through the lens of AI art.

The artwork above is generated by Mostafa ‘Neo’ Mohsenvand of the MIT Media Lab, using AI tools and manual editing and drawing.

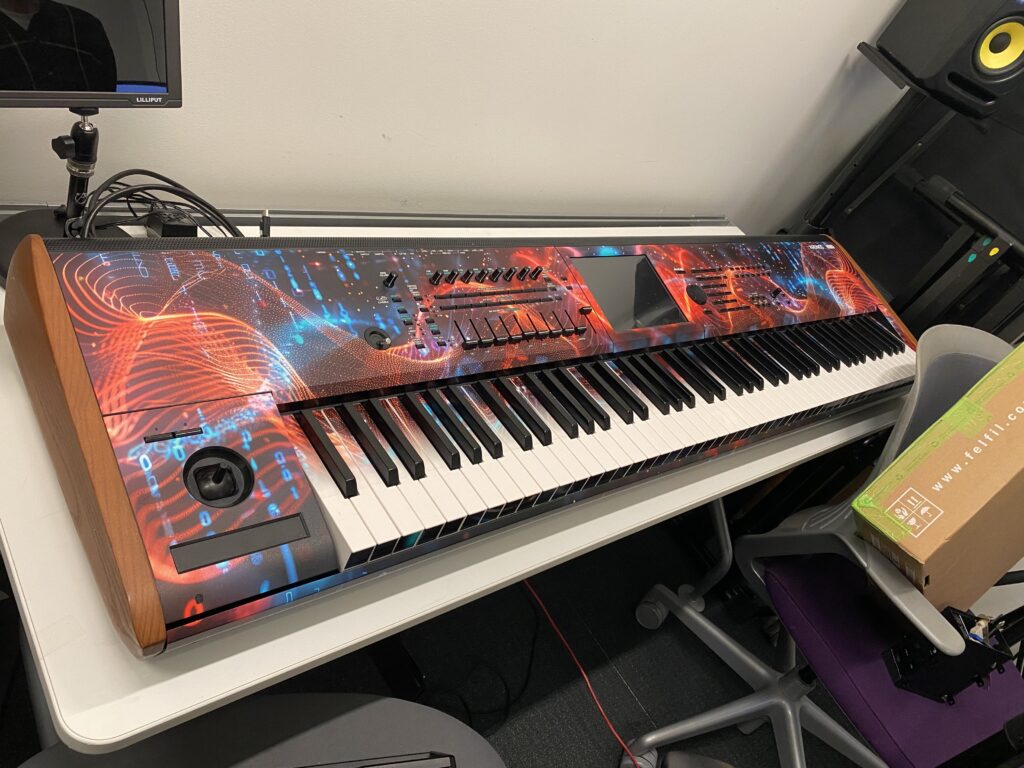

In September 2024, the MIT Media Lab staged a one-of-a-kind performance that blended human virtuosity with machine intelligence. Featuring Jordan Rudess (keyboardist of Dream Theater), violinist Camilla Bäckman, and an artificial intelligence system called jam_bot, the concert marked a major milestone in live AI-assisted improvisation.

The performance wasn’t just experimental — it was immersive. A kinetic sculpture suspended over the stage danced to the rhythms of jam_bot’s contributions, creating a multi-sensory dialogue between light, movement, and sound. The event highlighted a bold new future in which machines become collaborative partners in musical expression, and MIDI remains the essential glue that binds it all together.

The Technology Behind jam_bot

jam_bot was developed by the Responsive Environments Group at MIT’s Media Lab, led by Professor Joseph Paradiso. Graduate researchers Lancelot Blanchard and Perry Naseck trained the AI model to listen, respond, and even improvise with live musicians in real time.

At the heart of the system is a neural network trained on a vast dataset of MIDI and audio recordings. During performance, jam_bot uses live audio input and MIDI messages from Rudess’s and Bäckman’s instruments to make decisions about when and how to join the jam.

Its musical responses are transmitted via MIDI back to the synthesizers and kinetic sculpture controllers, creating a real-time feedback loop between performer and machine.
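To make the loop described above concrete, here is a minimal sketch in plain Python of MIDI note messages coming in, being interpreted, and going back out. It is not jam_bot's implementation: the source does not describe the model's internals, so the `respond` function is a hypothetical stand-in that answers each note a fifth higher on a second channel. The names `NoteEvent`, `parse_midi`, `encode_midi`, `respond`, and `feedback_loop` are all assumptions made for illustration. Only the byte layout of MIDI 1.0 note-on and note-off messages is standard.

```python
from dataclasses import dataclass
from typing import List, Optional

NOTE_ON = 0x90   # MIDI 1.0 status nibble for note-on
NOTE_OFF = 0x80  # MIDI 1.0 status nibble for note-off


@dataclass
class NoteEvent:
    channel: int   # 0-15
    note: int      # 0-127
    velocity: int  # 0-127
    on: bool       # True for note-on, False for note-off


def parse_midi(msg: bytes) -> Optional[NoteEvent]:
    """Decode a 3-byte MIDI 1.0 note message; return None for anything else."""
    if len(msg) != 3:
        return None
    status, note, velocity = msg
    if note > 127 or velocity > 127:
        return None  # data bytes must have the high bit clear
    kind, channel = status & 0xF0, status & 0x0F
    if kind == NOTE_ON and velocity > 0:
        return NoteEvent(channel, note, velocity, True)
    # A note-on with velocity 0 is treated as a note-off, per MIDI convention.
    if kind == NOTE_OFF or (kind == NOTE_ON and velocity == 0):
        return NoteEvent(channel, note, velocity, False)
    return None


def encode_midi(event: NoteEvent) -> bytes:
    """Encode a NoteEvent back into a 3-byte MIDI 1.0 message."""
    status = (NOTE_ON if event.on else NOTE_OFF) | event.channel
    return bytes([status, event.note, event.velocity])


def respond(event: NoteEvent, interval: int = 7, out_channel: int = 1) -> Optional[NoteEvent]:
    """Hypothetical stand-in for the AI: echo the note `interval` semitones higher."""
    target = event.note + interval
    if not 0 <= target <= 127:
        return None  # stay inside the valid MIDI note range
    return NoteEvent(out_channel, target, event.velocity, event.on)


def feedback_loop(incoming: List[bytes]) -> List[bytes]:
    """Turn a stream of performer messages into a stream of reply messages."""
    outgoing = []
    for raw in incoming:
        event = parse_midi(raw)
        if event is None:
            continue  # ignore non-note messages in this sketch
        reply = respond(event)
        if reply is not None:
            outgoing.append(encode_midi(reply))
    return outgoing
```

In a live setup, the reply messages would be written to a MIDI output port feeding the synthesizers and sculpture controllers. This sketch only shows the message handling and leaves audio analysis, timing, and port I/O out entirely.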

“We didn’t want it to just play along — we wanted it to have a personality,” said Naseck. The team explored how AI could exhibit *expressiveness*, not just accuracy, and the result was a surprisingly intuitive duet partner.

Last spring, Rudess became a visiting artist with the MIT Center for Art, Science and Technology (CAST), collaborating with the MIT Media Lab’s Responsive Environments research group on the creation of new AI-powered music technology.

Rudess’ main collaborators in the enterprise are Media Lab graduate students Lancelot Blanchard, who researches musical applications of generative AI (informed by his own studies in classical piano), and Perry Naseck, an artist and engineer specializing in interactive, kinetic, light- and time-based media. Overseeing the project is Professor Joseph Paradiso, head of the Responsive Environments group and a longtime Rudess fan.

Paradiso arrived at the Media Lab in 1994 with a CV in physics and engineering and a sideline designing and building synthesizers to explore his avant-garde musical tastes. His group has a tradition of investigating musical frontiers through novel user interfaces, sensor networks, and unconventional datasets. The researchers set out to develop a machine learning model channeling Rudess’ distinctive musical style and technique.

In a paper published online by MIT Press in September, co-authored with MIT music technology professor Eran Egozy, they articulate their vision for what they call “symbiotic virtuosity”: for human and computer to duet in real time, learning from each duet they perform together, and making performance-worthy new music in front of a live audience.

https://www.media.mit.edu/articles/a-model-of-virtuosity

Symbiotic Virtuosity: Musicians React

For Jordan Rudess, whose career has embraced everything from prog metal to app design, playing with jam_bot was more than a novelty — it was a glimpse into the future. “It was like having a creative partner with a mind of its own,” Rudess said. “It responded in ways that surprised me — sometimes gentle, sometimes chaotic — but always musical.”

Camilla Bäckman, an acclaimed Finnish violinist known for her genre-spanning performances and expressive stage presence, echoed the sentiment: “I felt like I was in a conversation, not just a performance. jam_bot would throw out a phrase and I’d reply. It wasn’t just reacting — it was listening.”

Aligning with the MIDI Innovation Awards

The MIDI Innovation Awards, a joint initiative of The MIDI Association, NAMM and Music Hackspace, celebrate groundbreaking work in music technology. jam_bot embodies this spirit — combining MIDI’s versatility with machine learning to explore new realms of live performance, composition, and collaboration.

By building on MIDI’s robust communication protocols, jam_bot was able to “listen” and “respond” to live musicians with millisecond precision. MIDI not only carried the performance data but helped structure the AI’s output to stay musically relevant, from harmony to rhythm. This technical backbone made the performance feel less like code and more like chemistry.
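One way to picture how MIDI data can keep generated notes harmonically and rhythmically in place is the sketch below, which snaps a note number to a scale and rounds a tick position to a grid. This is an illustration under stated assumptions, not a description of jam_bot: the source does not say how its output is constrained. The function names, the C major default, and the 480 ticks-per-quarter-note resolution are all hypothetical choices.

```python
C_MAJOR = (0, 2, 4, 5, 7, 9, 11)  # pitch classes of the major scale, relative to the root


def snap_to_scale(note: int, scale=C_MAJOR, root: int = 0) -> int:
    """Return the MIDI note in the scale closest to `note` (ties resolve downward)."""
    candidates = [n for n in range(128) if (n - root) % 12 in scale]
    return min(candidates, key=lambda n: (abs(n - note), n))


def quantize_ticks(tick: int, ppq: int = 480, division: int = 4) -> int:
    """Round a tick position to the nearest grid line.

    `ppq` is ticks per quarter note; division=4 gives a sixteenth-note grid.
    Positions exactly halfway between grid lines round up.
    """
    grid = ppq // division
    return ((tick + grid // 2) // grid) * grid
```

For example, `snap_to_scale(61)` moves a C sharp down to middle C (60), and `quantize_ticks(130)` pulls a slightly late note back to tick 120, the nearest sixteenth-note position.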

Meet the Artists

🎹 Jordan Rudess

Jordan Rudess is widely recognized as one of the greatest keyboardists in progressive rock. Best known for his role in Dream Theater, he has also released solo albums, developed music apps like GeoShred, and championed new musical technologies. Rudess frequently collaborates with developers and researchers to push the limits of what keyboards — and keyboardists — can do.

🎻 Camilla Bäckman

Camilla Bäckman is a classically trained violinist from Finland whose performance career spans folk, metal, and electronic music. In addition to her rich solo work, she is known for experimenting with loop pedals, voice, and live FX. Bäckman’s technical precision and expressive flair make her an ideal performer for boundary-pushing collaborations like jam_bot.

Looking Forward: The Next Movement in AI + MIDI

What makes jam_bot especially exciting is that it doesn’t replace the artist — it enhances them. By serving as an improvisational partner, AI becomes a source of inspiration, pushing performers in unexpected directions and redefining live music as a dynamic co-creation.

This project exemplifies the future MIDI aims to support: open, inclusive, and creatively adventurous.

It also serves as a call to developers and performers alike — to embrace not only new tools, but new relationships with the technology itself.

As Rudess put it, “It’s not about AI taking over — it’s about discovering what we can do together.”

The MIDI Association Visits The MIT Media Lab In April 2025

After the weekend at the 2025 Berklee Able Assembly, on Monday, April 14, 2025, we visited The MIT Media Lab.

Graduate researchers Lancelot Blanchard and Perry Naseck, who worked on the Jordan Rudess project, gave us a tour of the amazing MIT Media Lab facility.

The MIT Media Lab Building in Cambridge, Mass on the banks of the Charles River

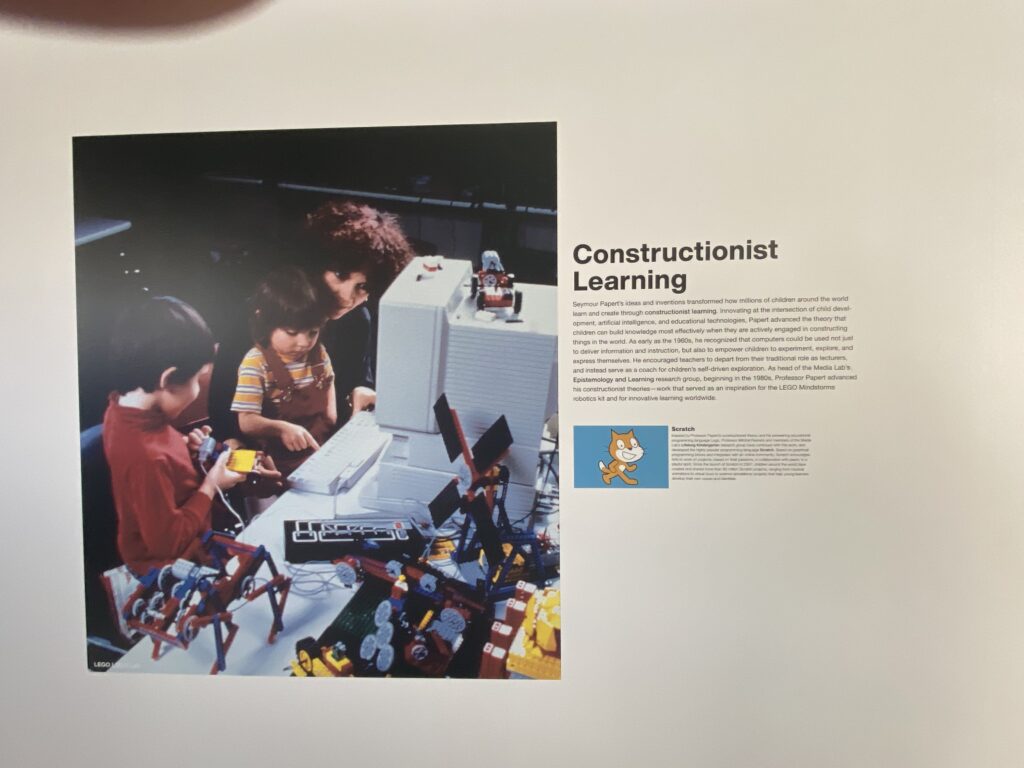

There is a reason for the Lego model of the buildings: the MIT Media Lab did a lot of work with Lego on both the Mindstorms robots for kids and Scratch, a programming language for kids. Check out the poster below on Constructionist Learning.

The MIT Media Lab Mission To The Moon

The exhibit is installed in the MIT Media Lab ground-floor gallery and is open to the public as part of Artfinity, MIT’s Festival for the Arts.

The installation allows visitors to interact with the software used for the mission in virtual reality.

Learn more about Luna from the MIT Morningside Academy for Design (MIT MAD)

MIT Media Lab

Here is a picture from the first floor of the MIT Media Lab: a wall of old TVs in varying condition, with content showing on every screen.

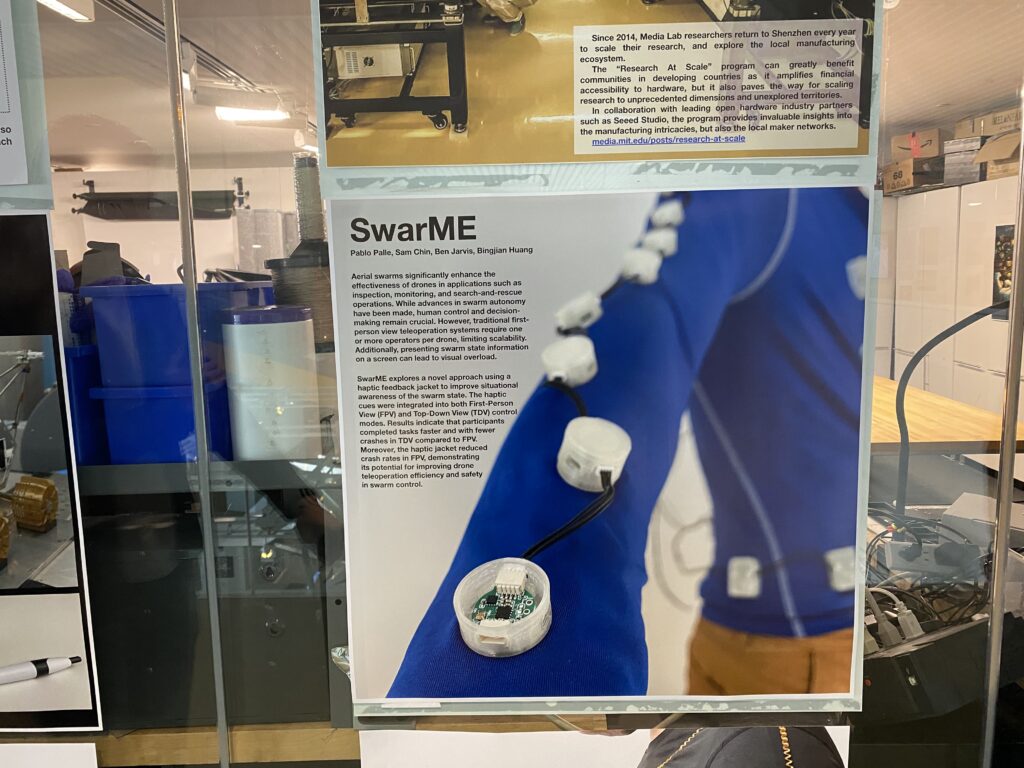

This jacket was designed to control drone swarms, but some of the same technology could perhaps provide haptic feedback for deaf people attending concerts, similar to the initiatives highlighted at the Able Assembly.

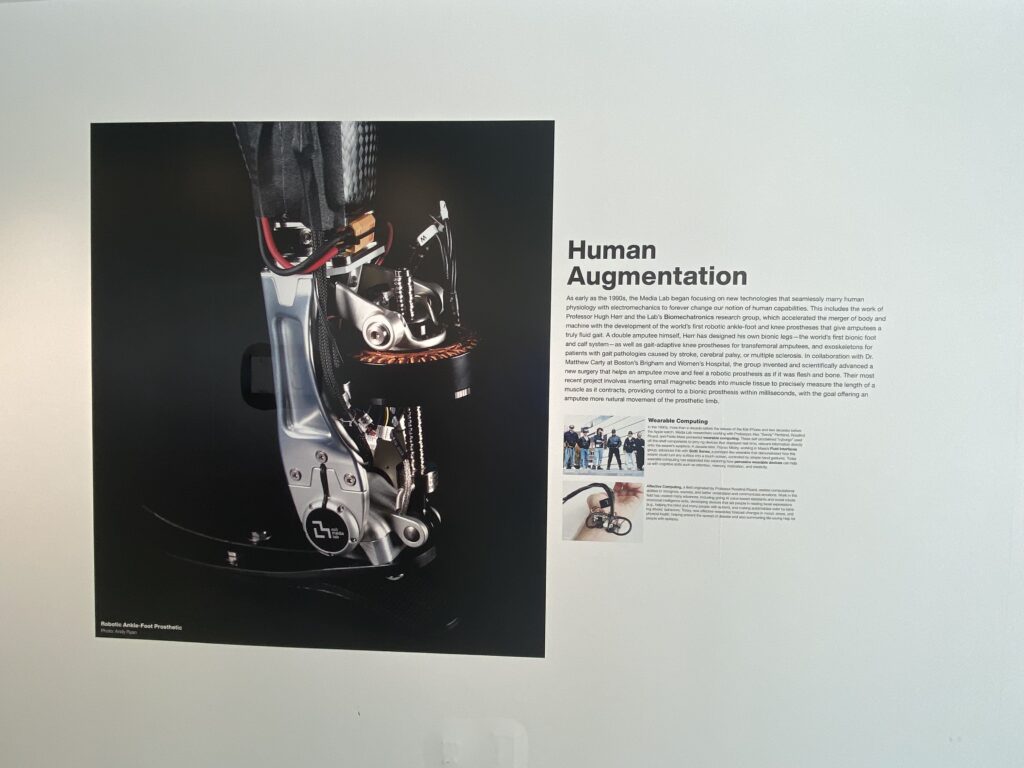

The MIT Media Lab’s work on human augmentation reminded us of the work done by Gil Weinberg at Georgia Tech in building a prosthetic arm for Jason Barnes, which led Barnes to found Cybernetic Sound.

MIT Media Lab Visit Wrap Up

We had a great meeting with Perry and Lancelot and brought them up to speed on the latest advances in MIDI 2.0.

They were really interested in using the new MIDI 2 Transport specification.

We also agreed that they should join The MIDI In Music Education Special Interest Group, which would give them access to new specifications under development.

We are excited about all the work that the MIT Media Lab is doing and are looking forward to further interactions in the future.