Designing AI VSTs for Generative Audio Workstations

DAW plugins have been approaching a saturation point over the past decade. Reskinned VSTs seem to outnumber innovative music software by a margin of ten to one. How many new delay and reverb effect plugins do we need? Generative AI and machine learning models are bringing a wave of novelty into the music software ecosystem. But there’s a problem; most of these applications lack the plugin UI that we’ve grown accustomed to.

These new models are built, summarized in papers on arXiv, promoted on Twitter, and circulated through a small community of AI enthusiasts. Only a few AI models have been productized and marketed to musicians.

Meanwhile, instant-song generators like Boomy and Soundraw are dominating public perceptions of AI music. These browser apps have been backed by venture capital and go after the royalty-free music market. Their marketing appeals to content creators who would normally shop for audio at Artlist, Epidemic, and SoundStripe. These browser apps aren’t designed for digital audio workflows.

The majority of AI music models are housed in open source GitHub repositories. Loading a model on your personal computer can be time- and resource-intensive, so they tend to be run on cloud services like Hugging Face or Google Colab instead. Some programmers are generous enough to share access to one of these spaces.

To overcome this problem, companies will need to start hiring designers and programmers who can make AI models more accessible.

In this article, I’ll share a few exclusive interviews that we held with AI VST developers at Neutone and Samplab. I was also fortunate to speak with Voger, a prolific plugin design company, to get their take on emerging trends in this space. Readers who want to learn more about AI models and innovative plugins will find a list at the end of this piece.

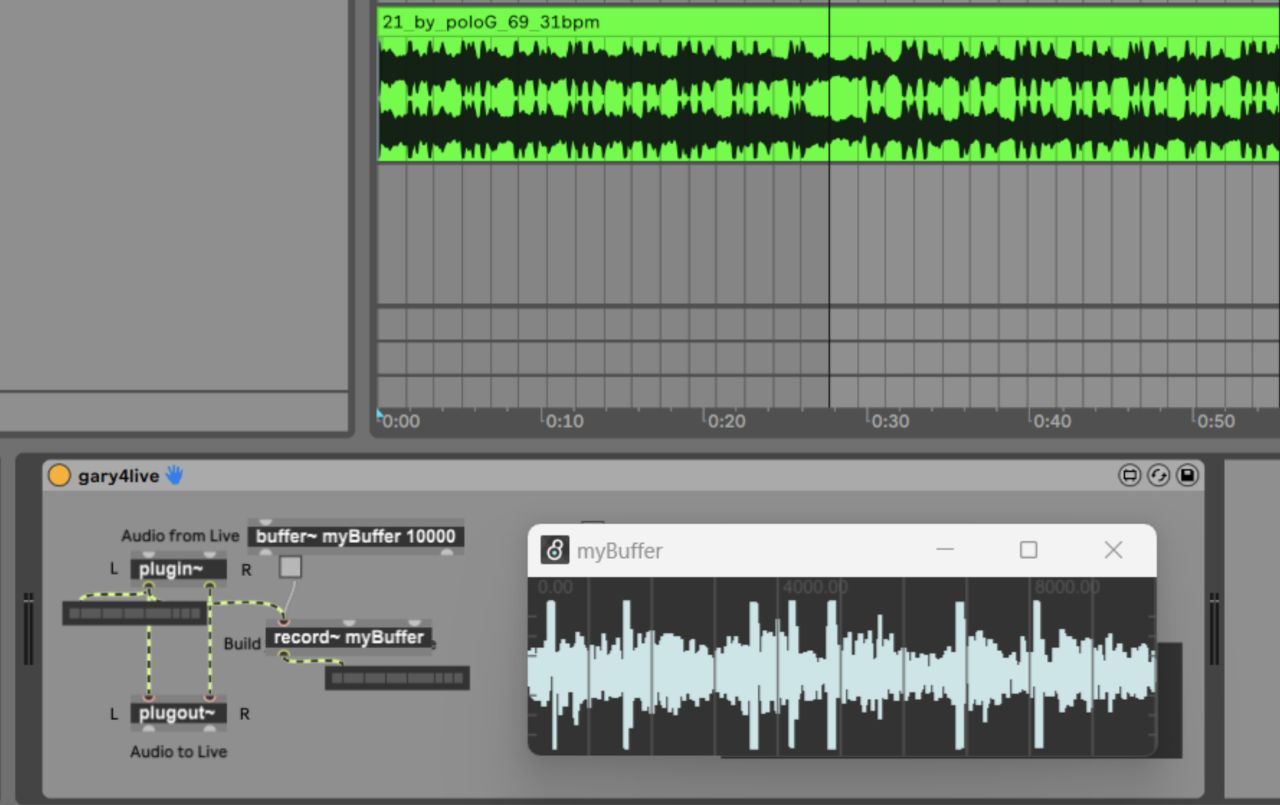

Early efforts to build AI music devices in Max for Live

Ableton Max for Live is currently one of the few plugin formats that easily loads AI models directly in a DAW. The device shown below, shared by Kev at The Collabage Patch, leverages MusicGen’s buffer feature to generate AI music in Ableton.

This next Max for Live device is called Text2Synth and was programmed by Jake (@Naughtttt), an acquaintance I met through Twitter. He created an AI text prompt system that generates wavetable synths based on descriptions of the sound you’re looking for.

Generative audio workstations: Designing UX/UI for AI models

The idea of a generative audio workstation (GAW) was popularized by Samim Winiger, CEO at generative AI music company Okio. His team is actively building a suite of generative music software and we hope to see more from them in the coming year.

A handful of AI DAW plugins are already available commercially. AudioCipher published a deep-dive on generative audio workstations last month that explored some of the most popular AI tools in depth. Instead of regurgitating that list, I’ve interviewed software developers and a design team to hear what they have to say about this niche. To kick things off, let’s have a look at Samplab.

Samplab 2: Creating and marketing an AI Audio-to-MIDI VST

Samplab is an AI-powered audio-to-MIDI plugin that offers stem separation, MIDI transcription, and chord recognition. Once the audio is transcribed, users can adjust notes on a MIDI piano roll to change the individual pitch values of the raw audio. Their founder Gian-Marco Hutter has become a close personal friend over the past year and I was excited to learn more about his company’s backstory.

1. Can you tell us about your background in machine learning and how Samplab got started?

We are two friends who met during our studies in electrical engineering and focused on machine learning while pursuing our master’s degrees. Thanks to co-founder Manuel Fritsche’s research in the field, we were able to receive support from the Swiss government through a grant aimed at helping academic projects to become viable businesses. Through this program we could do research on our technology while being employed at the university.

2. How far along were you with development when you decided to turn Samplab into a commercial product?

We explored various applications of AI in music production to find out where we could provide the most value. At first, we launched a simple VST plugin and gave it out for free to get some people to test it. To our surprise, it was picked up by many blogs and spread faster than we anticipated. From that point on, we knew that we were onto something and built more advanced features on top of the free tier.

3. What were some of the biggest design challenges you faced while building Samplab 2?

It’s hard to pinpoint a single challenge, as we had to overcome so many. Our AI model can’t be run locally on a computer in a reasonable time, so we decided to provide an online service and run the frontend in a VST plugin or a standalone desktop app. Making the backend run efficiently and keeping the user interface clean and simple were all quite challenging tasks.
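The split described above, with a heavy model on a server and a light frontend in the plugin, can be sketched in a few lines. This is only an illustrative sketch, not Samplab’s actual code or API: the names `TranscriptionJob`, `fake_remote_transcribe`, and `ThinClient` are hypothetical, and the "remote" call is a local stand-in so the example runs offline.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class TranscriptionJob:
    """Hypothetical request payload: raw samples plus their sample rate."""
    samples: List[float]
    sample_rate: int


def fake_remote_transcribe(job: TranscriptionJob) -> List[Dict[str, float]]:
    """Stand-in for a network call to a cloud-hosted model (an assumption).

    Returns one dummy note per full second of audio so the sketch runs offline.
    """
    seconds = len(job.samples) // job.sample_rate
    return [{"pitch": 60.0, "start": float(s), "duration": 1.0} for s in range(seconds)]


class ThinClient:
    """Plugin-side frontend: hands work to a background worker and returns at once."""

    def __init__(self, backend: Callable = fake_remote_transcribe) -> None:
        self._backend = backend
        self._pool = ThreadPoolExecutor(max_workers=1)

    def submit(self, job: TranscriptionJob) -> Future:
        # The caller (for example a UI thread) is not blocked while the backend works.
        return self._pool.submit(self._backend, job)

    def close(self) -> None:
        self._pool.shutdown(wait=True)


# Two seconds of silence at 44.1 kHz, submitted as a background job.
client = ThinClient()
future = client.submit(TranscriptionJob(samples=[0.0] * 88200, sample_rate=44100))
notes = future.result(timeout=5)
client.close()
```

In a real plugin the backend function would be replaced by an HTTP request, and the frontend would poll the future from its UI loop rather than blocking on `result()`.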

4. What Samplab features are you most proud of?

When working with polyphonic material, our audio-to-MIDI is at the cutting edge and it still keeps improving. We’re proud of our ability to change single notes, even in complex audio, and completely rearrange what’s played in samples. We’re also super happy every time we get an email from a user giving us positive feedback.

Check out Samplab’s website here.

Neutone: A plugin hub for 3rd party AI music models

Next up is Neutone’s CTO, Andrew Fyfe. We reached out to learn more about their company’s mission and design philosophy.

The Neutone plugin acts as a hub for in-house and third-party AI models in the music and audio space. Typically these services would be hosted in a web browser or run locally on an advanced user’s machine. To create a hub like this, Neutone’s design team needed to work closely with each model and expose its core features, to make sure they run properly within any DAW.

1. Neutone has taken a unique approach to AI music software, acting as a hub for multiple AI models. What were some of the key considerations when designing the “main view” that displays the list of models?

When considering the model browser design, we wanted to make it easy for users to stay up to date with newly released AI models. Accessibility to cutting-edge AI tech for artists is one of the major pillars of Neutone so we focused on making the workflow simple and intuitive! One of the biggest advantages of Neutone is that the browser hooks into our online model repository so we can keep it up to date with the latest models we release.

Users download modules straight into the plugin, sort of like Output’s Arcade but for AI models instead of sample libraries! We also wanted to make sure that we credit the AI model creators appropriately, so this information is upfront and visible when navigating the browser. Additional search utilities and tags allow users to easily navigate the browser panel to find the model they are looking for.

2. When users make their selection, they see different interfaces depending on the core functionality of that model. How does your team arrive at the designs for each screen? For example, do you have in-house UX/UI designers that determine the most important features to surface and then design around that? Or do the model’s creators tend to serve up wireframes to you to expand upon?

We worked closely with our UI/UX designer and frontend team to put together the most generalized, intuitive, and slickest looking interface possible for our use-case. We wanted to create an interface that could adapt to various kinds of neural audio models. It needed to be able to communicate these changes to the user but keep the user interaction consistent. The knobs that we expose update their labels based on the control parameters of the equipped model.

We use our Neutone SDK to enforce some requirements on model developers for compatibility with our plugin. Artists and researchers can leverage our Python SDK to wrap and export their neural audio models to load into the plugin at runtime. We also warmly accept submissions from our community so that we can host them on our online repo for all to access!
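The idea of a fixed set of knobs that relabel themselves for whichever model is loaded can be sketched as follows. This is a hedged illustration and not the Neutone SDK: `ModelDescriptor`, `ModelParameter`, `GenericPanel`, the model names, and the four-knob limit are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List

NUM_KNOBS = 4  # assumed size of the generic interface, not a documented limit


@dataclass
class ModelParameter:
    name: str
    default: float = 0.5


@dataclass
class ModelDescriptor:
    """Hypothetical metadata that a wrapped model declares to the host plugin."""
    model_name: str
    author: str  # shown up front so model creators are credited
    parameters: List[ModelParameter] = field(default_factory=list)


@dataclass
class Knob:
    label: str = "-"
    value: float = 0.0
    enabled: bool = False


class GenericPanel:
    """One consistent set of knobs whose labels follow the equipped model."""

    def __init__(self) -> None:
        self.knobs = [Knob() for _ in range(NUM_KNOBS)]

    def equip(self, model: ModelDescriptor) -> None:
        # Compatibility requirement: a model may not declare more controls
        # than the interface can display.
        if len(model.parameters) > NUM_KNOBS:
            raise ValueError(f"{model.model_name} declares too many parameters")
        for i, knob in enumerate(self.knobs):
            if i < len(model.parameters):
                param = model.parameters[i]
                knob.label, knob.value, knob.enabled = param.name, param.default, True
            else:
                # Unused knobs are reset and disabled.
                knob.label, knob.value, knob.enabled = "-", 0.0, False

    def labels(self) -> List[str]:
        return [knob.label for knob in self.knobs]


panel = GenericPanel()
panel.equip(ModelDescriptor(
    "timbre-transfer-demo", "Jane Doe",
    [ModelParameter("Pitch Shift"), ModelParameter("Brightness", 0.3)],
))
labels_a = panel.labels()

panel.equip(ModelDescriptor("reverb-demo", "John Roe", [ModelParameter("Decay")]))
labels_b = panel.labels()
```

Keeping the knob count fixed and letting the model supply only names and defaults is one way to keep interaction consistent across very different models, at the cost of capping how many controls a model can expose.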

3. Were there any special considerations when designing the color palette and aesthetic of the app? I noticed that each model interface feels coherent with the others, so I’m wondering if you have brand guidelines in place to ensure the app maintains a unified feel.

On Neutone’s conception we imagined it not only being a product but an impactful brand that held its own amongst the other audio software brands out there. We are pioneering new kinds of technology and making neural audio more accessible to creators, so we wanted to marry this bold mission with a bold brand and product identity. We worked closely with our graphic designer to achieve our current look and feel and established brand guidelines to maintain visual consistency across our website and product range. The Neutone platform is only the beginning, and we have many more tools for artists coming soon!

Check out the Neutone website here.

Voger & UI Mother: 15 years of music plugin interface design

To round things out, I spoke with Nataliia Hera of Voger Design. She’s an expert in UX/UI design for DAW plugins. Her interfaces have been used by major software products including Kontakt, Propellerhead’s Reason, and Antares. So of course we had to get her take on the future of design for AI VSTs.

Nataliia is also the founder of UI Mother, a music construction kit marketplace for plugin developers who want to assemble high quality interfaces, without paying the usual premium for custom designs.

1. I’m excited to understand your design philosophy. But first, can you share some of the companies you’ve built plugin interfaces for?

2. I read that you started the company with your husband more than ten years ago. Can you tell us about your personal design background? What inspired you two to begin creating interfaces and a music business together?

We’re self-taught in design, starting from scratch 15 years ago with no startup funds or educational resources, especially in audio plugin design, which was relatively new. We pieced together knowledge from various sources, learning by studying and analyzing designs, not limited to audio.

Our journey began when Vitaly tried using the REAPER DAW and saw the chance to improve its graphics, which weren’t appealing compared to competitors. Creating themes for REAPER garnered positive user feedback and prompted Vitaly to explore professional audio design, given the industry’s demand.

As for me, I lacked a design background initially but learned over the years, collaborating with my husband, an art director. We revamped REAPER themes successfully, along with GUIs for plugins and Kontakt libraries, leading to a growing portfolio. About six months later, we established our design agency, filling a need for quality audio program design.

Today, I’ve acquired skills in graphic design, branding, and product creation processes, thanks to our extensive library of knowledge and years of practice.

3. What does your design process look like when starting with a new client? For example, do they typically give you a quick sketch to guide the wireframe? I noticed that your portfolio included wireframes and polished designs. How do you work together to deliver a final product that matches the aesthetic they’re looking for?

It is interesting, but from the outside, the client sees the process as working with a design agency. I compared the processes while talking to the agency owners I know and when I ordered a design service. The client gives data as they are. Usually, this can be a rough sketch, a list of controls, and sometimes an example of the style the client wants to achieve.

Usually, the process looks like this:

- We provide research on the features and how to design them in layout or style.

- Then, we create a mood board and interactive layout.

- Then, it’s time for the style creation.

- At the delivery stage, we prepare files for implementation into code, animation, etc.

- We design everything in an art or animated clip for the site or a video.

All the related things I do and implement help us to improve the result. For example, we implemented an inner standard for each level to decrease the risks when the client gets the result he didn’t expect.

The same things are true about the KPI and all other business instruments. All of them are focused on the client to get all he needs in time. Now I say “he needs,” but there is a difference between “he needs” and “he wants”. It is another story – typical client’s creative process, you know. We even have some kind of client scale where we foresee the risks of creating hard, creative things that need time, iteration, and sorting out variants in the conditions of often conflicting or unchanged data in the technical task. It is normal!

4. Voger designs more than just flat user interfaces. Your portfolio includes some beautiful 3D-modeled interfaces. Are these used to promote the applications on websites and advertisements? What software do you use?

3D technology is very important in creating the most expensive and attractive designs for an audio sphere. This applies to a promo or a presentation and primarily to the UI itself. The biggest part of the most attractive photo-realistic designs of audio plugins you have seen for the last 20 years were either partially or completely created with the help of 3D technologies. Because only 3D technologies can give us believable shadows, lights and materials for objects.

Plausibility and photo-realism are essential for UI audio plugins. Firstly, people are used to seeing and using familiar and legendary hardware devices (synthesizers, drum machines, effects and others). All of these devices have recognizable, unique design styles and ways of use (for example, familiar to all: Juno 106, TR-808 or LA-2A).

We all live in the real world, so even ordinary flat UI tries to repeat the real world with the help of shadows, imitation of real light and real materials like wood, plastic or metal (by the way, that is the reason Flat UI in iOS7 failed, but this is material for another interview). Actually, there is no difference in the kinds of programs. Any modern and adequate 3D editor can create a cool audio design. But we have been using only Blender 3D for these years. It is free, stable, doesn’t cause problems, and we can always rely on it (which can not be said, for example, about Adobe and similar companies).

5. If a client wants to build a physical synth, are you able to create 3D models that they can hand off to a manufacturer?

Theoretically, yes, why not? But, practically, it all depends on a project, and we cannot influence many co-factors as the clients have not given us such an opportunity yet. I am talking about the engineering part with inner components like types of faders, potentiometers, transformers, chip location etc. We understand clients in such situations, we are not engineers to design schemes, but an exterior design made of 5 types of knobs and three types of faders available on eBay cannot be claimed as extremely complex. Still, we have experience creating a couple of such hardware devices. Unfortunately, we cannot disclose them because of NDA, as it is still under production, but one will appear very soon, and you will definitely hear about it.

6. There’s recently been a shift toward artificial intelligence in generative music software. These AI models typically run on services like Hugging Face, Google Colab, or on a skilled user’s personal computer. There are some amazing services out there that have no interface at all. I think these engineers don’t know where to go to turn their software into usable product interfaces.

Firstly, we’re a long way from achieving true AI (maybe 20 or even 40 years away). Right now, our “AI” isn’t quite the cool, creative intelligence we imagine. It’s more like trying to spread butter with a flint knife. If AI-generated designs flood the market, they’ll all look alike since they’re based on the same internet data. Soon, people will want something new, and we’ll start the cycle again. The idea of becoming AI’s foundation is intriguing. We’d love to see what the VOGER design, averaged over time by a neural model, looks like. Will it be light or dark? My art director is grinning; he knows something!

7. I noticed that you have a second company called UI Mother that sells music interface construction kits. Can you talk about how the service differs from Voger? What is the vision for this company?

All is simple. Here, we are talking about a more democratic and budget-friendly model of getting a cool design for audio software than VOGER. Let us imagine the developers who want to try independent design creation but don’t have enough money to pay a professional team of designers. Or they risk hiring unknown freelancers who can let them down in an unexpected moment. These developers can get professional and high-quality graphics in the form of a UI kit optimized for audio programs, including many nuances of this sphere, like frame-by-frame animation, stripes, different screen sizes, etc. These clients are often independent developers or experimentalists. Sometimes, it can be small companies that need to test an idea or to create some simple, even free product.

Check out Voger Design to see more of their work and UI Mother to explore their VST UI construction kits.

What kind of AI models would benefit from a plugin interface?

To wrap up this article, let’s have a look at some of the most interesting AI models in circulation and try to identify programs that don’t have an interface yet. I’ve organized them by category and as you’ll see, there’s a broad spread of options for the new plugin market:

- Text prompt systems: We’ve seen several text-to-music and text-to-audio models emerge this year. The big ones are MusicGen, MusicLM, Stable Audio, Chirp, and Jen-1 but none of them offer a DAW plugin.

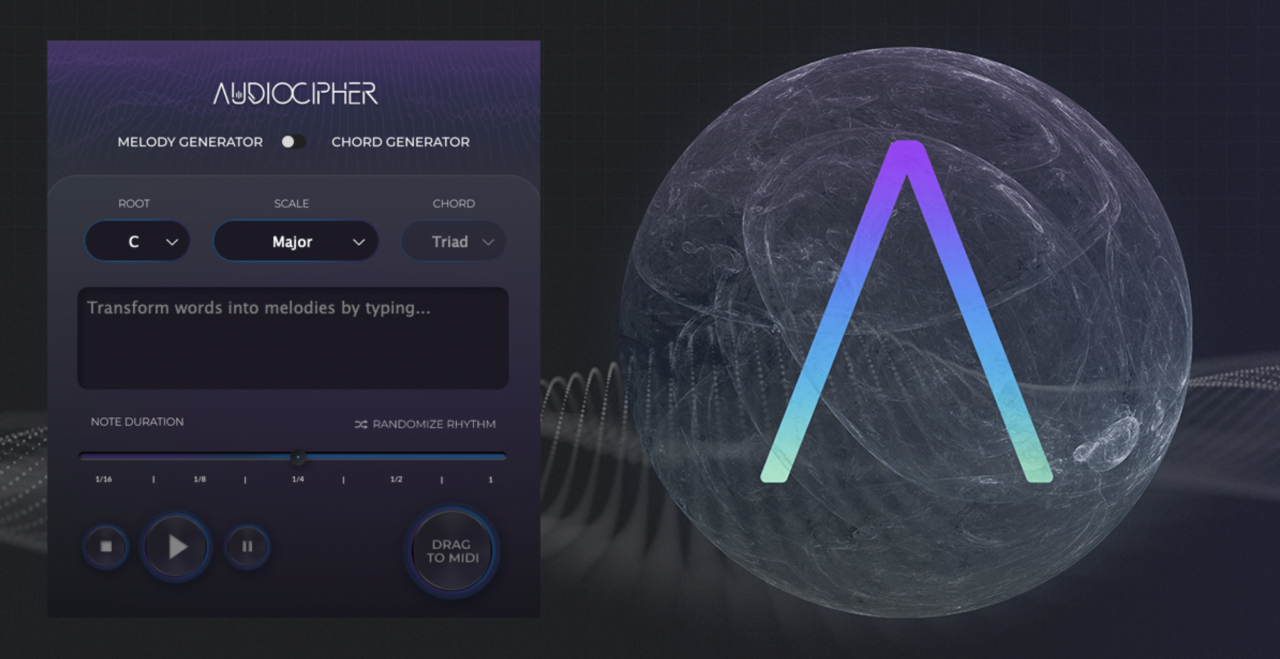

- AudioCipher launched a text-to-MIDI plugin in 2020 and is currently on version 3. The algorithm generates melodies and chord progressions, with rhythm automation and layers of randomization. It’s not running on an AI model currently but it will in the future, as the current generative text-to-MIDI models mature. Our team is partnered with a developer who is solving this. In the meantime, version 4.0 is due for release before the end of 2023 and existing customers get free lifetime version upgrades.

- WavTool’s GPT-4 powered AI DAW operates in a web browser but doesn’t offer a desktop plugin yet.

- We previously published a MIDI.ORG interview with Microsoft’s Pete Brown regarding the Muzic initiative at Microsoft Research. Their MuseCoCo text-to-MIDI model does not have a user interface.

- The music editing model InstructME supports text prompts requesting individual instrument layers to be added, removed, extracted and replaced.

- Audio-to-MIDI: As we already pointed out, Samplab is currently the most prominent audio-to-MIDI provider and the only VST on the market. The Spotify AI service Basic Pitch also offers this functionality, but only in a web browser.

- AI Melody Generators: There are already several VSTs solving melody generation, usually through randomization algorithms. However, we were able to identify over a dozen AI melody generation models in the public domain.

- In August 2023, a melody generator called Tunesformer was announced.

- ParkR specializes in jazz solo generation and has been maintained through the present day.

- Some models, like the barely-pronounceable H-EC2-VAE, take in chord progressions and generate melodies to fit them.

- AI Chord Generators: Chord progression generators and polyphonic composing programs are also very popular points of focus among AI model developers.

- A diffusion model called Polyffusion, announced in July 2023, generates single track polyphonic MIDI music.

- A second model called LHVAE also came out in July and builds chords around existing melodies.

- SongDriver’s model builds entire instrumental arrangements around a melody.

- AI bass lines: There’s a fine line between writing melodies and bass lines, but bass lines are arguably different enough to warrant their own section. SonyCSL created the BassNet model to address this niche.

- Compose melodies for lyrics: At the moment, Chirp is the best lyric-to-song generator on the market, but other models like ROC have attempted to solve the same problem.

- Parameter inference for synths: Wondering which knobs to twist in order to get that trademark sound from your favorite artist? The AI model Syntheon identifies wavetable parameters of sampled audio to save audio engineers time.

- Music information retrieval: Machine learning has long been used to analyze tracks and pull out important details like tempo, beats, key signature, chords, and more.

- Music Structure Analyzer offers an open source solution for this.

- I previously wrote an article about infinite songs, showcasing how music captioning services like Music Understanding Llama and LP MusicCaps can be used to generate rich descriptions of music that feed back into text-to-music generators.

- AI Voices: Text to speech has been popular for a long time. Music software companies like Yamaha have started building AI voice generators for singing and rapping. The neural vocoder model BigVSAN uses “slicing adversarial networks” to improve the quality of these AI voices.

- AI Drumming: Songwriters who need a percussion track but struggle with writing drum parts could benefit from automation.

- VAEDER is a generative rhythmic tool built by Okio’s product manager Julian Lenz and supervised by Behzad Haki.

- The JukeDrummer model was designed to detect beats in an audio file and generate drums around it.

- Nutall’s model focused on creating MIDI drum patterns specifically.

- Drum Loop AI created a sequencer interface that runs Google Magenta on its backend and exports MIDI or audio files.

- For plugin user interface inspiration, check out our article on Drum VSTs.

- Stem Separation: There are several popular stem separators on the market already, like VocalRemover’s Splitter AI. In the future, it would be helpful for plugins to offer this service directly in the audio workstation.

- Timbre and style transfer: An ex-Google AI audio programmer, Hanoi Hantrakul, was hired by TikTok to create a high resolution instrument-to-instrument timbre transfer plugin called MAWF. The plugin is available in beta and currently transfers to four instruments. Neutone supports similar features including Google’s DDSP model. A second model called Groove2Groove attempts style transfer across entire tracks.

- Audio for Video: This article has focused on DAWs, but AI music models could also be designed to plug into video editors.

- The award-winning sound design DAW Audio Design Desk recently launched a generative AI company called MAKR with a text-to-music service called SoundGen that will load in their video editor and integrate with other video editors like Final Cut Pro. Ultimately, this could lead to a revolution in AI film scoring.

- A vision-to-audio model called V2A Mapper turns videos into music, analyzing the on-screen events and generating sound effects with a popular open source model called AudioLDM.

- AI music video software like Neural Frames uses AI stem separation and transients to modulate generative imagery.

- Emotion-to-Audio: AI-assisted game design researcher Antonio Liapis published an arXiv paper earlier this year detailing a system that models emotions using valence, energy and tension. His system focuses on horror soundscapes for games. Berkeley researchers went so far as to record audio directly from the brain and at AudioCipher we speculated how this could be used to record dream songs.

- Improvised collaboration: One of Google Magenta’s earliest efforts was called AI Duet. This concept has existed in the public domain for decades, like the computer-cello duet scene in the movie Electric Dreams. We’ve penned a full article outlining scenarios and existing software that could provide an AI bandmate experience.

This list covers the majority of AI music model categories that we’ve encountered. I’m sure there are plenty of others out there and there may even be entire categories that I missed. The field is evolving on a monthly basis, which is part of the reason we find it so exciting.

About the author: Ezra Sandzer-Bell is the founder of AudioCipher Technologies and serves as a software marketing consultant for generative music companies.