CES 2026: MIDI Gear to Watch—and the Accessibility Tech That Can Change How We Make Music

CES 2026 ran January 6–9, 2026 in Las Vegas, and once again the show floor was full of ideas that matter to music creators: new ways to control software, new approaches to wireless “instant play,” and (most importantly) a fast-growing wave of accessibility-first products—Braille, tactile graphics, haptics, smart glasses, and autonomous navigation—built to help more people participate in everyday life.

This CES 2026 roundup is written for the MIDI community with two lenses:

- MIDI products and creator tools first—things that can become controllers, instruments, or workflow accelerators.

- Accessibility next—especially Braille and touch-based interfaces that can directly influence accessible music-making.

MIDI and creator tech highlights

modue — modular control surface thinking (and MIDI integration)

modue positions itself as a modular control deck—touchscreen, motorized sliders, keys, and encoders—designed to control apps for audio/video and beyond. For MIDI creators, the key detail is that modue explicitly calls out MIDI integration as part of its control story, which naturally fits DAWs, live rigs, and even lighting/DMX workflows that already speak MIDI/OSC.

Why it matters for MIDI: modular hardware control is back in a big way, and modue’s “building-block” approach maps well to how modern creators work—different layouts for tracking, mixing, performance, and accessibility-friendly setups (bigger targets, fewer layers, tactile + motor feedback).
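To make the "different layouts" idea concrete, here is a minimal sketch of how per-task layouts could map the same physical controls to different MIDI Control Change messages. The layout names and control names (`slider_1`, `encoder_1`) are hypothetical and are not taken from modue's software; only the Control Change byte format and the common controller numbers (1 = mod wheel, 7 = channel volume, 10 = pan, 74 = brightness) come from MIDI 1.0.

```python
# Hypothetical layouts: the same physical controls send different CC numbers.
LAYOUTS = {
    "mixing": {"slider_1": 7, "encoder_1": 10},       # volume, pan
    "performance": {"slider_1": 1, "encoder_1": 74},  # mod wheel, brightness
}


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI 1.0 Control Change message (channel is 0-15)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0-15; controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def message_for(layout: str, control: str, position: float, channel: int = 0) -> bytes:
    """Translate a control position (0.0-1.0) into a CC message for a layout."""
    clamped = max(0.0, min(1.0, position))
    return control_change(channel, LAYOUTS[layout][control], round(clamped * 127))


full_volume = message_for("mixing", "slider_1", 1.0)
mod_wheel_down = message_for("performance", "slider_1", 0.0)
```

Switching layouts only swaps the lookup table, which is also how an accessibility-friendly layout with fewer, larger controls could be offered without changing the hardware.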

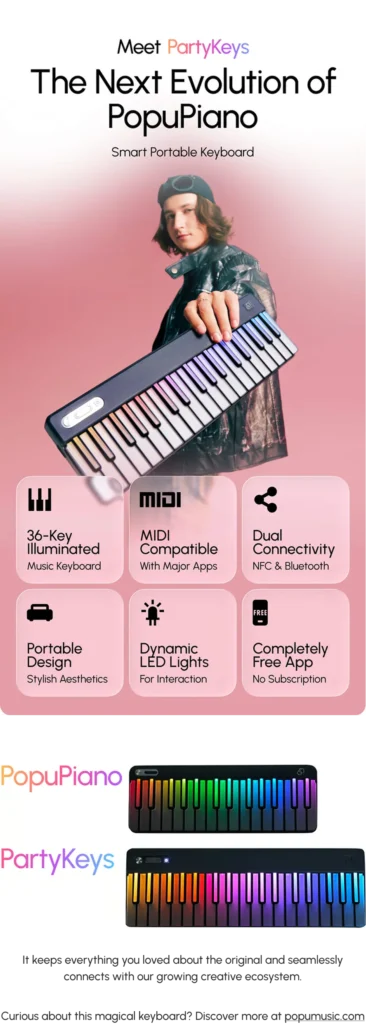

PartyKeys & PartyStudio — “instant band” hardware with wireless MIDI

PartyKeys markets a light-guided keyboard controller designed for “follow the lights” play.

PartyStudio is pitched as a wireless MIDI synth speaker with built-in sounds and rhythms. The concept is musician-friendly and accessibility-adjacent: lower friction, fewer barriers, and an emphasis on participation over perfection.

MusicRadar’s early coverage highlights practical specs that matter to MIDI users: multiple MIDI connections, low-latency wireless performance, built-in sounds/rhythms, and an “all-in-one” approach that can reduce setup complexity for classrooms, jams, and inclusive music activities.

MusicRadar PartyStudio review

Why it matters for MIDI: “instant play” systems can be powerful on-ramps—especially when paired with adaptive teaching, simplified control, and accessible content design.

PopuMusic, the company behind PartyKeys and PartyStudio, is The MIDI Association’s newest member.

Piano LED Plus 2026 — MIDI-driven learning with visual guidance

Piano LED Plus 2026 is an LED guidance system that connects to a piano via USB-to-host or MIDI and works with an app so keys light up “note-by-note.” It’s explicitly positioned as MIDI/USB-connected and designed to guide practice while detecting errors and waiting for correct notes—an interesting example of a learning interface where MIDI becomes the bridge between instruction and physical action.

Why it matters for MIDI: when MIDI is used as the learning “plumbing,” it becomes easier to build alternate feedback loops—color, haptics, audio cues, or simplified note ranges—depending on the learner’s needs.
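As a rough illustration of the "wait for the correct note" behavior, the sketch below steps through a melody and only advances when the expected MIDI note arrives. It is not Piano LED Plus's implementation: the `WaitModeLesson` class and its feedback labels are our own assumptions, and the only facts taken from MIDI 1.0 are the Note On status byte (0x9n) and the convention that a Note On with velocity 0 counts as a Note Off.

```python
from dataclasses import dataclass, field

NOTE_ON = 0x90  # status nibble for Note On in MIDI 1.0


@dataclass
class WaitModeLesson:
    """Hypothetical lesson that advances only when the expected note is played."""
    expected_notes: list[int]  # MIDI note numbers in playing order
    position: int = 0
    mistakes: list[tuple[int, int]] = field(default_factory=list)  # (expected, played)

    @property
    def finished(self) -> bool:
        return self.position >= len(self.expected_notes)

    def handle_message(self, status: int, note: int, velocity: int) -> str:
        """Process one 3-byte channel message and return a feedback label."""
        is_note_on = (status & 0xF0) == NOTE_ON and velocity > 0
        if not is_note_on or self.finished:
            return "ignored"
        expected = self.expected_notes[self.position]
        if note == expected:
            self.position += 1      # move the guidance to the next key
            return "correct"
        self.mistakes.append((expected, note))
        return "wrong"              # stay on the same key and wait


lesson = WaitModeLesson(expected_notes=[60, 62, 64])  # C4, D4, E4
incoming = [(0x90, 60, 100), (0x80, 60, 0), (0x90, 61, 90),
            (0x90, 62, 90), (0x90, 64, 0), (0x90, 64, 80)]
feedback = [lesson.handle_message(*message) for message in incoming]
```

Because the feedback is just a label, the same loop could drive LEDs, a haptic pulse, or a spoken cue, depending on what the learner needs.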

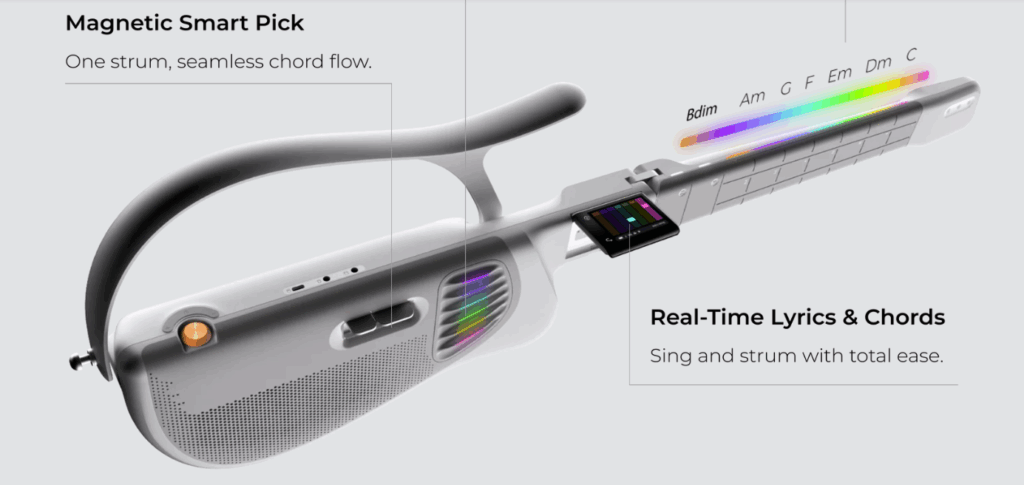

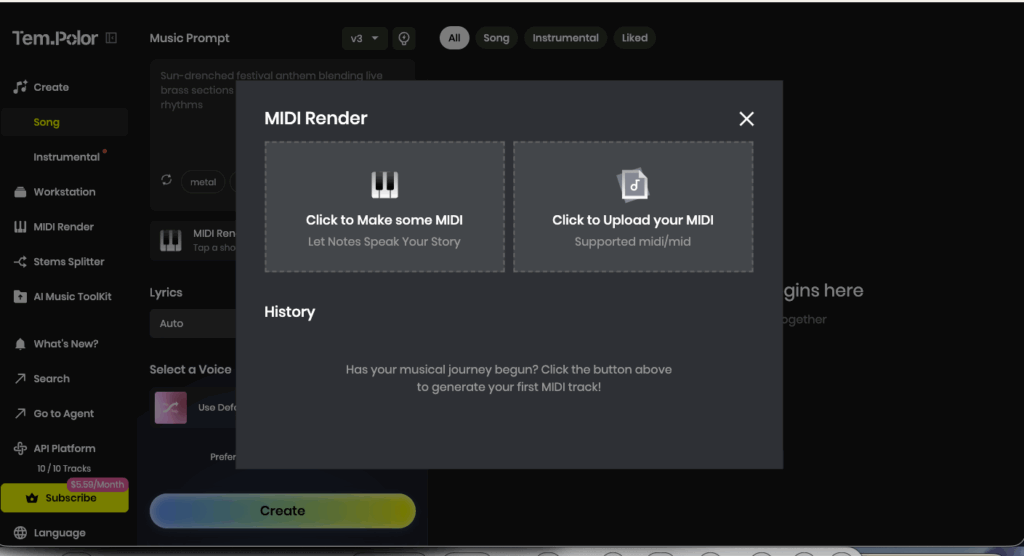

TemPolor Melo-D: a “generative AI guitar” that turns humming, chords, and prompts into playable parts

TemPolor Melo-D is positioned as “the world’s first generative AI guitar,” built around the idea that AI can act as a real-time collaborator—not just a production tool. Instead of asking players to master complex shapes first, Melo-D aims to generate playable guitar parts (and guidance) from simple inputs like a hummed melody, a chord idea, or a text prompt.

What it does (according to TemPolor and design-award documentation):

- Hum-to-Solo / Hum-to-Song: hum an idea and Melo-D generates a guitar melody in seconds, synced to the instrument.

- Text-to-Song and Chords-to-Song: create “complete tracks” from text or chord inputs, including lyrics and AI vocals (as described on TemPolor’s product pages).

- Three-mode concept: (1) Solo Mode that turns favorite songs into fingerstyle melodies via generative AI, (2) Sing-along Mode with an AI vocal guide for pitch/timing, and (3) AI Creation Mode that composes original songs based on mood/style descriptions.

How you interact with it: Melo-D emphasizes “on-instrument” guidance—an HD flip-up display plus a Rhythm Game Mode (explicitly compared to Guitar Hero-style play) so you can follow along without staring at a phone. The neck includes LED fret guidance with 7-key and 21-key modes, positioned as a skill-adaptive layout that can scale from beginner to more advanced use.

Sound + feel claims: TemPolor describes an “infinite” AI tone approach (“endless tones in real time”) and highlights an immersive/Hi-Res sound direction via a built-in acoustic chamber. Award documentation also mentions built-in tones, an auto drum-machine element, and an “arpeggio pad” meant to emulate string feel.

Availability notes: TemPolor’s product page is currently a reservation/deposit flow (not a direct product purchase), describing a $29 refundable deposit tied to a Kickstarter launch window and an “early” price of $449 vs. a $599 MSRP, with shipping timelines noted around late March/early April 2026.

https://tempolorguitar.com/products/he-world-s-first-generative-ai-guitar

The TemPolor guitar uses a chip from MIDI Association member Dream for its sounds, and the TemPolor AI app supports MIDI.

bHaptics — full-body haptics as a feedback channel for creators

bHaptics is best known for consumer-ready full-body haptic gear (vests/suits) used in XR and gaming. Their product pages describe vests with multiple motors and broad software/content compatibility, plus audio-to-haptics options. For music, the interesting angle is not “MIDI hardware” directly, but the opportunity for haptics as a performance and accessibility feedback channel: feel timing, feel structure, feel cues—without relying entirely on sight or hearing.

Why it matters for MIDI: haptics can complement accessible music-making—think tactile metronomes, “section change” cues, or physical confirmation of parameter moves in a live set.
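A tactile metronome is easy to sketch because MIDI already carries tempo as Timing Clock messages (0xF8), sent 24 times per quarter note. The example below turns that stream into pulse intensities, with a stronger pulse on the downbeat. The `HapticMetronome` class and the 0.0-1.0 intensity scale are assumptions for illustration, and no bHaptics SDK call is used; a real integration would pass the returned intensity to whatever haptic API is available.

```python
from typing import Optional

CLOCK, START, STOP = 0xF8, 0xFA, 0xFC  # MIDI 1.0 System Real-Time messages
TICKS_PER_QUARTER = 24                 # Timing Clock rate defined by MIDI 1.0


class HapticMetronome:
    """Hypothetical converter from MIDI clock bytes to haptic pulse intensities."""

    def __init__(self, beats_per_bar: int = 4):
        self.beats_per_bar = beats_per_bar
        self.tick_count = 0
        self.running = False

    def handle_realtime_byte(self, byte: int) -> Optional[float]:
        """Return a pulse intensity on each beat, or None when nothing should fire."""
        if byte == START:
            self.tick_count = 0
            self.running = True
            return None
        if byte == STOP:
            self.running = False
            return None
        if byte != CLOCK or not self.running:
            return None
        pulse = None
        if self.tick_count % TICKS_PER_QUARTER == 0:
            beat = (self.tick_count // TICKS_PER_QUARTER) % self.beats_per_bar
            pulse = 1.0 if beat == 0 else 0.5  # stronger pulse on the downbeat
        self.tick_count += 1
        return pulse


metronome = HapticMetronome(beats_per_bar=4)
stream = [START] + [CLOCK] * (TICKS_PER_QUARTER * 5)  # five quarter notes
pulses = [p for p in map(metronome.handle_realtime_byte, stream) if p is not None]
```

The same counter could trigger a distinct pattern every few bars to signal a section change.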

Accessibility at CES 2026: Braille, tactile graphics, vision tools, mobility, and neurodiversity support

CES has become a serious venue for disability tech—especially products that translate the visual world into touch or audio, and products that reduce the “setup cost” of independence. For the MIDI community, the most exciting trend is the rise of touch-based information interfaces (Braille, tactile graphics, haptics), because music hardware and software are still heavily visual.

Dot Inc. — Dot Pad and tactile graphics at scale

Dot Inc. is one of the most important companies to watch in tactile displays. The Dot Pad is positioned as a device that lets blind and low-vision users explore content as tactile graphics and multi-line Braille via Bluetooth, including support for PDFs, images, and more through companion software like Dot Canvas.

We were pointed to this booth by Jay Pocknell from our Music Accessibility SIG, because one of the leaders at Dot Inc. used to work at the Royal National Institute of Blind People (RNIB), where Jay still works.

Broader reporting has highlighted how multi-line tactile displays can improve access to diagrams, math, and spatial information—exactly the kinds of things that are hard to convey via audio alone (and very relevant to music tech UIs).

MIDI relevance: imagine a future where synth editors, routing matrices, step sequencers, mixing views, and chord/scale maps can be rendered as tactile layouts. The underlying pattern—“visual UI translated into touch”—is directly aligned with where accessible music tools need to go.
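As a thought experiment, here is a minimal sketch of a step sequencer rendered as a touch-readable grid. It uses the Unicode Braille Patterns block (starting at U+2800) purely as a text preview: an active step raises all eight dots, and an inactive step raises only the bottom two so a finger can follow the row. The function names and the cell design are our assumptions, and this is not the Dot Pad API; a real tactile display would receive pin data through its own SDK.

```python
BRAILLE_BASE = 0x2800                     # first code point of Unicode Braille Patterns
ACTIVE_CELL = chr(BRAILLE_BASE | 0xFF)    # all eight dots raised
INACTIVE_CELL = chr(BRAILLE_BASE | 0xC0)  # dots 7 and 8 only: a low baseline to follow


def render_track(steps: list[bool]) -> str:
    """Render one sequencer track as a row of Braille cells, one cell per step."""
    return "".join(ACTIVE_CELL if on else INACTIVE_CELL for on in steps)


def render_pattern(pattern: dict[str, list[bool]]) -> list[str]:
    """Render every track in insertion order, one row per track."""
    return [render_track(steps) for steps in pattern.values()]


pattern = {
    "kick":  [True, False, False, False, True, False, False, False],
    "snare": [False, False, True, False, False, False, True, False],
}
rows = render_pattern(pattern)
for name, row in zip(pattern, rows):
    print(f"{name:>5} {row}")
```

Keeping the mapping this simple (one cell per step, one row per track) mirrors the on-screen grid closely, which is the point: the tactile view should carry the same spatial information as the visual one.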

MIDI relevance (labeling): labeling is a huge part of accessibility for music gear: hardware synths, controllers, mixers, interfaces. A portable Braille label workflow, such as the Nemonic Dot printer covered below, can help musicians quickly label controls, ports, pedals, and patch bays in a consistent, personal system.

PocketDot — reading and practicing Braille on your phone

PocketDot describes itself as a “cutting-edge” Braille device aimed at making it easier to read and practice Braille using a phone-centric workflow.

We had a great talk with the founder, who even suggested that it would be easy to turn the PocketDot into a MIDI controller!

MIDI relevance: phone-first Braille tools could become companions to music apps—quick labeling, learning, and reference while building accessible workflows in studios and classrooms.

Mangoslab — Nemonic Dot (AI Braille label printing)

Nemonic Dot is presented as a portable Braille label printer + mobile app that makes Braille creation accessible on demand, including multilingual conversion to Braille and support for standard Braille formats. The CES Innovation Awards page describes the system as a complete workflow (app + printer), explicitly focusing on real-world labeling needs.

https://www.ces.tech/ces-innovation-awards/2026/nemonic-dot

HapWare — computer vision + haptics for social cues

HapWare says it is building wearable assistive technology for blind, low-vision, deaf-blind, and autistic users, combining computer vision and haptic feedback. Recent coverage highlights a product concept that pairs a haptic wristband with smart glasses to convey information through tactile signals.

MIDI relevance: haptic “meaning signals” can be applied to music contexts—gesture confirmation, stage cues, ensemble timing, or even tactile UI navigation for software instruments and mixers.

AGIGA — EchoVision smart glasses for blind/low-vision users

EchoVision is positioned as smart glasses designed “with and for” blind and low-vision users, emphasizing hands-free access to visual information and independence.

https://echovision.agiga.ai/

MIDI relevance: assistive vision can support music tasks that are still visually dense: reading small device screens, identifying ports/cables, navigating stage environments, and verifying setup states in a live rig.

AidALL — Bedivere autonomous navigation companion

AidALL is listed in the CES exhibitor directory under accessibility/AI/robotics categories. Their product information describes Bedivere as an on-device AI-powered modular robot form factor, and the CES Innovation Awards page frames it as an autonomous mobility companion for visually impaired users.

- Find them at CES 2026: AidALL Inc. — Venetian Expo, Hall G — Booth 62643.

MIDI relevance: while not “music tech,” mobility independence affects access to gigs, rehearsals, classes, and studio work. It’s also part of the bigger picture of inclusive participation in music communities.

NewHaptics + Monarch — multi-line Braille and tactile display momentum

NewHaptics has published technical discussion around why multi-line Braille displays matter and references the broader ecosystem of devices and partnerships pushing this category forward. Meanwhile, the Monarch product is described as a multi-line Braille device that integrates tactile graphics with Braille on a 10-line by 32-cell refreshable display.

MIDI relevance: if tactile “pages” become more common, there’s an opportunity to rethink how complex musical information is presented—arrangements, automation lanes, patch graphs, routing views, and educational diagrams.

Auria Robotics — autism-tailored AI companion

Auria presents itself as an AI-powered robotic companion for autism intervention, emphasizing affordability, accessibility, and personalized support.

MIDI relevance: neurodiversity-aware tools and supports can intersect with music education and therapy, and CES is increasingly a place where those crossovers appear.

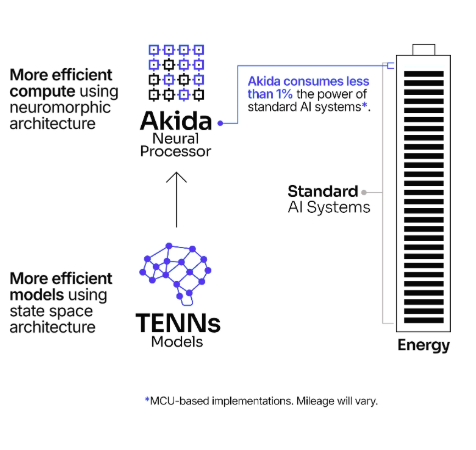

Brain interfaces and on-device AI (BraineuLink + BrainChip)

BraineuLink describes work on non-invasive EEG brain-computer interfaces for decoding user intentions and interfacing with digital devices, while BrainChip describes its Akida neuromorphic processor platform for low-power, real-time edge AI.

MIDI relevance: these are enabling technologies: lower-power on-device perception and alternate input methods are exactly what’s needed for future accessible instruments, adaptive controllers, and context-aware performance rigs.

What the MIDI community should take from CES 2026

1) Accessibility is becoming “interface innovation,” not a side category

Braille labels, tactile graphics displays, haptic wearables, and assistive smart glasses are all part of a single story: making information usable in more than one sensory channel. That’s a perfect match for music technology, where too many workflows still assume full vision + fine motor control + dense, layered screens.

2) Tactile and haptic feedback is a major opportunity for instruments and controllers

The rise of devices like Dot Pad (tactile graphics) and consumer haptics (bHaptics) suggests a future where music tools can “speak touch” as naturally as they speak sound.

3) Lower friction helps everyone

PartyKeys/PartyStudio-style “instant play” concepts and MIDI-driven learning tools like Piano LED Plus show how reducing setup complexity can open doors—especially in music education and inclusive group settings.

If you’re not already connected, this is exactly the kind of CES signal that aligns with The MIDI Association’s ongoing accessibility work and community-building.

Auracast and what it means for accessibility

We plan to write another article focused on a major technology trend at CES that affects accessibility: Auracast.

Auracast (Bluetooth SIG) is a new Bluetooth LE Audio technology that allows one device to broadcast high-quality audio to an unlimited number of nearby devices, like a personal radio station, enabling seamless sharing and much-needed assistive listening in public spaces like airports, gyms, and theaters. It works by transmitting audio from a source (TV, phone, system) to multiple receivers (hearing aids, earbuds, speakers) without complex pairing, making public audio more accessible and personal, while also allowing private audio sharing between friends.


How it works

- Broadcasting: An Auracast transmitter (e.g., a public TV, phone) sends audio streams.

- Receiving: Auracast-enabled devices (hearing aids, earbuds) scan for available broadcasts, similar to Wi-Fi networks.

- Connecting: Users select a broadcast (and sometimes enter a passcode) to tune in directly, getting clear audio without interference.
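The three steps above can be sketched as a small simulation. This is plain Python with no Bluetooth calls: the `Broadcast` class, the `scan` and `tune_in` functions, and the example broadcast names are all hypothetical, and a real receiver would rely on its platform's LE Audio stack rather than anything shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Broadcast:
    """Hypothetical stand-in for one advertised Auracast broadcast."""
    name: str
    passcode: Optional[str] = None  # None models an open (unencrypted) broadcast


def scan(nearby: list[Broadcast]) -> list[str]:
    """Receiving: list broadcast names, much like listing Wi-Fi networks."""
    return [broadcast.name for broadcast in nearby]


def tune_in(nearby: list[Broadcast], name: str, passcode: Optional[str] = None) -> bool:
    """Connecting: succeed if the broadcast is open or the passcode matches."""
    for broadcast in nearby:
        if broadcast.name == name:
            return broadcast.passcode is None or broadcast.passcode == passcode
    return False  # no broadcast with that name is in range


nearby = [
    Broadcast("Gate B12 Announcements"),       # open, public broadcast
    Broadcast("Rehearsal Room Mix", "1234"),   # protected broadcast
]
available = scan(nearby)
joined_open = tune_in(nearby, "Gate B12 Announcements")
joined_without_code = tune_in(nearby, "Rehearsal Room Mix")
joined_with_code = tune_in(nearby, "Rehearsal Room Mix", passcode="1234")
```

Note that nothing in the flow involves pairing with the transmitter, which is why one source can serve any number of listeners.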

Key features & benefits

- Accessibility: Delivers audio directly to hearing aids in public venues, replacing older, less effective systems.

- One-to-Many Sharing: Share music or video audio with multiple friends at once from a single source device.

- Low Energy: Uses Bluetooth LE (Low Energy) for lower power consumption, preserving battery life.

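- Future-Proof: Built on Bluetooth LE Audio, which arrived with version 5.2 of the Bluetooth Core Specification, so it is an open standard that any LE Audio-capable device can support.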

CES: Robots and AI

If we were asked to sum up CES, it would be two words: Robots and AI. Of course, many of the advances in robots would not be possible without AI.

Here is a photo gallery of the CES show including many photos related to the two words above.