About MIDI - Part 1: Overview

MIDI (pronounced “mid-e”) is a technology that makes creating, playing, or just learning about music easier and more rewarding. Playing a musical instrument can provide a lifetime of enjoyment and friendship. Whether your goal is to play in a band, or you just want to perform privately in your home, or you want to develop your skills as a music composer or arranger, MIDI can help.

How Does MIDI Work?

There are many different kinds of devices that use MIDI, from cell phones to digital music instruments to personal computers. The one thing all MIDI devices have in common is that they speak the “language” of MIDI. This language describes the process of playing music in much the same manner as sheet music: there are MIDI Messages that describe what notes are to be played and for how long, as well as the tempo, which instruments are to be played, and at what relative volumes.

MIDI is not audio.

MIDI is not audio, so anyone who says that MIDI sounds bad has misunderstood how MIDI works. Imagine handing the sheet music for a work by Beethoven to someone who can read music but has never played the violin, and then putting a very cheap violin in their hands. The music would probably sound bad. Now hand that same sheet music to the first chair of a symphony orchestra playing a Stradivarius, and it will sound wonderful. In the same way, how MIDI sounds depends on the quality of the playback device and on how well the description of the music fits that device.

MIDI is flexible

The fact that MIDI is a descriptive language provides tremendous flexibility. Because MIDI data is only performance instructions and not a digital version of a sound recording, it is actually possible to change the performance, whether that means changing just one note played incorrectly, or changing all of them to perform the song in an entirely new key, at a different tempo, or on different instruments.
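Because the data is a list of instructions, editing a performance is ordinary data manipulation. The following is a minimal sketch, assuming a hypothetical in-memory representation of notes as (key number, start beat, length in beats) tuples; this representation and the function names are illustrative and are not part of any MIDI specification.

```python
# Hypothetical note representation: (key_number, start_beat, length_beats).
melody = [(60, 0.0, 1.0), (62, 1.0, 1.0), (64, 2.0, 2.0)]  # C, D, E

def transpose(notes, semitones):
    """Shift every note by a number of semitones, clamped to MIDI's 0..127 key range."""
    return [(min(127, max(0, key + semitones)), start, length)
            for key, start, length in notes]

def beats_to_seconds(notes, bpm):
    """Convert beat positions and lengths to seconds at the given tempo."""
    seconds_per_beat = 60.0 / bpm
    return [(key, start * seconds_per_beat, length * seconds_per_beat)
            for key, start, length in notes]

up_a_fourth = transpose(melody, 5)        # same tune in a new key
at_120_bpm = beats_to_seconds(melody, 120)  # same tune at a chosen tempo
```

The same edits on an audio recording would require signal processing; here they are a few lines of arithmetic on the note list.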

MIDI data can be transmitted between MIDI-compatible musical instruments, or stored in a Standard MIDI File for later playback. In either case, the resulting performance will depend on how the receiving device interprets the performance instructions, just as it would in the case of a human performer reading sheet music. The ability to fix, change, add, remove, speed up or slow down any part of a musical performance is exactly why MIDI is so valuable for creating, playing and learning about music.

The Three Parts of MIDI

The original Musical Instrument Digital Interface (MIDI) specification defined a physical connector and message format for connecting devices and controlling them in “real time”. A few years later Standard MIDI Files were developed as a storage format so performance information could be recalled at a later date. The three parts of MIDI (the messages, the transports, and the files) are often just referred to as “MIDI”, even though they are distinctly different parts with different characteristics.

1. The MIDI Messages – the software protocol

The MIDI Messages specification (or “MIDI Protocol”) is the most important part of MIDI. The protocol is made up of the MIDI messages that describe the music. Note messages tell the MIDI device which note to play, and the velocity value carried with each note tells the device how loud to play it. Other messages define how bright, long, or short a note will be, and Program Change messages tell the MIDI device which instrument to play. By studying and understanding MIDI messages you can learn how to completely describe a piece of music digitally. Look for information about MIDI messages in the “Control” section of Resources.

2. The physical transports for MIDI

Though originally intended just for use with the MIDI DIN transport as a means to connect two keyboards, MIDI messages are now used inside computers and cell phones to generate music, and transported over any number of professional and consumer interfaces (USB, Bluetooth, FireWire, etc.) to a wide variety of MIDI-equipped devices.

There are many different Cables & Connectors that are used to transport MIDI data between devices. Look for specific information in the “Connect” section of Resources.

MIDI is not slow

The “MIDI DIN” transport causes some confusion because some people mistake its specific characteristics for characteristics of “MIDI” itself, forgetting that the MIDI-DIN characteristics go away when MIDI is carried over other transports (or used inside a computer). With computers, a High Speed Serial, USB, or FireWire connection is more common, and USB MIDI is significantly faster than 5-pin DIN. Each transport has its own performance characteristics that might make some difference in specific applications, but in general the transport is the least important part of MIDI, as long as it allows you to connect all the devices you want to use!

3. The file formats for MIDI files

The final part of MIDI is made up of the Standard MIDI Files (and variants), which are used to distribute music playable on MIDI players of both the hardware and software variety. All popular computer platforms can play MIDI files (*.mid) and there are thousands of web sites offering files for sale or even for free. Anyone can make a MIDI file using commercial (or free) software that is readily available, and many people do. Whether or not you like a specific MIDI file can depend on how well it was created and on how accurately your synthesizer plays the file. Not all synthesizers are the same, and unless yours is similar to that of the file composer, what you hear may not be at all what he or she intended. Look in the “Create” section of Resources for information about how to create and use different MIDI file formats.

Even More MIDI

Many people today see MIDI as a way to accomplish something, rather than as a protocol, cable, or file format. For example, many musicians will say they “use MIDI”, “compose in MIDI” or “create MIDI parts”, which means they are sequencing MIDI events for playback via a synthesizer, rather than recording the audio that the synthesizer creates.

About MIDI - Part 2: MIDI Cables & Connectors

Part 2: MIDI Cables & Connectors

Many different “transports” can be used for MIDI messages. The speed of the transport determines how much MIDI data can be carried, and how quickly it will be received.

5-Pin MIDI DIN

The MIDI DIN transport, which uses a 5-pin “DIN” connector, was developed back in 1983, so it is slow compared to common high-speed digital transports available today, like USB, FireWire, and Ethernet. But MIDI-DIN is still found on many MIDI-equipped devices because its speed is adequate for communicating with one device. Also, if you want to connect one MIDI device to another (without a computer), MIDI cables are usually needed.
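To put a number on "slow", here is a rough calculation. It assumes the figures given in the MIDI 1.0 specification rather than in this article: the DIN transport runs at 31,250 bits per second, and each byte is framed by one start bit and one stop bit. The function name is illustrative.

```python
MIDI_DIN_BAUD = 31_250       # bits per second (MIDI 1.0 specification)
BITS_PER_BYTE_ON_WIRE = 10   # 1 start bit + 8 data bits + 1 stop bit

def din_transfer_ms(num_bytes):
    """Milliseconds needed to send num_bytes over a MIDI DIN cable."""
    return num_bytes * BITS_PER_BYTE_ON_WIRE / MIDI_DIN_BAUD * 1000.0

one_note_ms = din_transfer_ms(3)        # a single 3-byte Note On message
ten_notes_ms = din_transfer_ms(3 * 10)  # a 10-note chord sent as full messages
```

Under these assumptions a single note takes just under a millisecond, which is why the transport is adequate for one instrument but can become a bottleneck when one cable carries dense data for many parts.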

USB and FireWire

Computers are most often equipped with USB and possibly FireWire connectors, and these are now the most common means of connecting MIDI devices to computers (using appropriate adapters). Adapters can be as simple as a short cable with USB or FireWire connectors on one end and MIDI DIN connectors on the other, or as complex as a 19 inch rack mountable processor with dozens of MIDI and Audio In and Out ports. The best part is that USB and FireWire are “plug-and-play” interfaces which means they generally configure themselves. In most cases, all you need to do is plug in your USB or FireWire MIDI interface and boot up some MIDI software and off you go.

With USB technology, devices must connect to a host (PC), so it is not possible to connect two USB MIDI devices to each other as it is with two MIDI DIN devices. (This could change sometime in the future with new versions of USB). USB-MIDI devices require a “driver” on the PC that knows how the device sends/receives MIDI messages over USB. Most devices follow a specification (“class”) that was defined by the USB-IF; Windows and Mac PCs already come with “class compliant” drivers for devices that follow the USB-IF MIDI specification. For more details, see the article on USB in the Connection area of Resources.

Most FireWire MIDI devices also connect directly to a PC with a host device driver, and the host handles communication between FireWire MIDI devices even if they use different drivers. But FireWire also supports “peer-to-peer” connections, so MMA (along with the 1394TA) produced a specification for transport of MIDI over IEEE-1394 (FireWire), which is available for download on this site (and also part of the IEC-61883 international standard).

Ethernet & WiFi (LAN)

Many people have multiple MIDI instruments and one or more computers (or a desktop computer and a mobile device like an iPad), and would like to connect them all over a local area network (LAN). However, Ethernet and WiFi LANs do not always guarantee on-time delivery of MIDI messages, so MMA has been reluctant to endorse LANs as a recommended alternative to MIDI DIN, USB, and FireWire. That said, there are many LAN-based solutions for MIDI, the most popular being the RTP-MIDI specification which was developed at the IETF in cooperation with MMA Members and the MMA Technical Standards Board. In anticipation of increased use of LANs for audio/video in the future, MMA is also working on recommendations for transporting MIDI using new solutions like the IEEE-1722 Transport Protocol for Time-Sensitive Streams.

Bluetooth

Everything is becoming “mobile”, and music creation is no exception. There are hundreds of music-making software applications for tablets and smart phones, many of which are equipped with Bluetooth “LE” (aka “Smart”) wireless connections. Though Bluetooth technology is similar to WiFi in that it cannot always guarantee timely delivery of MIDI data, in some devices Bluetooth takes less battery power to operate than WiFi, and in most cases it will be less likely to encounter interference from other devices (because Bluetooth is designed for short distance communication). In 2015 the MMA adopted a recommended practice (specification) for MIDI over Bluetooth LE.

Deprecated Solutions

Sound Cards

It used to be that connecting a MIDI device to a computer meant installing a “sound card” or “MIDI interface” in order to have a MIDI DIN connector on the computer. Because of space limitations, most such cards did not have actual 5-Pin DIN connectors on the card, but provided a special cable with 5-Pin DINs (In and Out) on one end (often connected to the “joystick port”). All such cards need “driver” software to make the MIDI connection work, but there are a few standards that companies follow, including “MPU-401” and “SoundBlaster”. Even with those standards, however, making MIDI work could be a major task. Over a number of years the components of the typical sound card and MIDI interface (including the joystick port) became standard on the motherboard of most PCs, but this did not make configuring them any easier.

Serial, Parallel, and Joystick Ports

Before USB and FireWire, personal computers were all generally equipped with serial, parallel, and (possibly) joystick ports, all of which have been used for connecting MIDI-equipped instruments (through special adapters). Though not always faster than MIDI-DIN, these connectors were already available on computers and that made them an economical alternative to add-on cards, with the added benefit that in general they already worked and did not need special configuration. The High Speed Serial Ports such as the “mini-DIN” ports available on early Macintosh computers support communication speeds roughly 20 times faster than MIDI-DIN, making it also possible for companies to develop and market “multiport” MIDI interfaces that allowed connecting multiple MIDI-DINs to one computer. In this manner it became possible to have the computer address many different MIDI-equipped devices at the same time. Recent multi-port MIDI interfaces use even faster USB or FireWire ports to connect to the computer.

About MIDI - Part 4: MIDI Files

Standard MIDI Files (“SMF” or *.mid files)

Standard MIDI Files (“SMF” or *.mid files) are a popular source of music on the web, and for musicians performing in clubs who need a little extra accompaniment. The files contain all the MIDI instructions for notes, volumes, sounds, and even effects. The files are loaded into some form of ‘player’ (software or hardware), and the final sound is then produced by a sound-engine that is connected to or that forms part of the player.

One reason for the popularity of MIDI files is that, unlike digital audio files (.wav, .aiff, etc.) or even compact discs or cassettes, a MIDI file does not need to capture and store actual sounds. Instead, the MIDI file can be just a list of events which describe the specific steps that a soundcard or other playback device must take to generate certain sounds. This way, MIDI files are much smaller than digital audio files, and the events are also editable, allowing the music to be rearranged, edited, even composed interactively, if desired.

All popular computer platforms can play MIDI files (*.mid) and there are thousands of web sites offering files for sale or even for free. Anyone can make a MIDI file using commercial (or free) software that is readily available, and many people do, with a wide variety of results.

Whether or not you like a specific MIDI file can depend on how well it was created, and how accurately your synthesizer plays the file… not all synthesizers are the same, and unless yours is similar to that of the file composer, what you hear may not be at all what he or she intended. General MIDI (GM) and GM2 both help address the issue of predictable playback from MIDI Files.

Formats

The Standard MIDI File format is different from native MIDI protocol, because the events are time-stamped for playback in the proper sequence.
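The time stamps are stored as delta-times (the number of ticks since the previous event) in a compact "variable-length quantity" encoding defined by the Standard MIDI File specification: seven bits of value per byte, with the top bit set on every byte except the last. The sketch below shows one way to encode and decode these values; the function names are illustrative.

```python
def write_vlq(value):
    """Encode a non-negative integer as an SMF variable-length quantity."""
    out = [value & 0x7F]                    # last byte: top bit clear
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)   # earlier bytes: top bit set
        value >>= 7
    return bytes(reversed(out))

def read_vlq(data, pos=0):
    """Decode a variable-length quantity; return (value, next_position)."""
    value = 0
    while True:
        byte = data[pos]
        pos += 1
        value = (value << 7) | (byte & 0x7F)
        if byte & 0x80 == 0:                # top bit clear marks the final byte
            return value, pos

delta, next_pos = read_vlq(b"\x81\x00\x90\x3c\x64")  # delta-time, then a Note On
```

Small delta-times, which are the most common, occupy a single byte, which helps keep the files small.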

Standard MIDI Files come in two basic varieties: a Type 1 file, and a Type 0 file (a Type 2 was also specified originally but never really caught on, so we won’t spend any time discussing it here). In a Type 1 file individual parts are saved on different tracks within the sequence. In a Type 0 file everything is merged into a single track.
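The file type is recorded in the first chunk of the file. As a sketch, assuming the header layout from the Standard MIDI File specification (the ASCII tag `MThd`, a 32-bit length of 6, then three big-endian 16-bit fields for format, track count, and time division), a file's type can be read like this. The function name and the example bytes are illustrative.

```python
import struct

def parse_smf_header(data):
    """Read the MThd chunk from the first 14 bytes of a Standard MIDI File."""
    chunk_id, length, fmt, ntracks, division = struct.unpack(">4sIHHH", data[:14])
    if chunk_id != b"MThd" or length < 6:
        raise ValueError("not a Standard MIDI File header")
    return {"format": fmt, "tracks": ntracks, "division": division}

# Illustrative header: Type 1 file, 3 tracks, 480 ticks per quarter note.
example = b"MThd" + struct.pack(">IHHH", 6, 1, 3, 480)
info = parse_smf_header(example)
```

To inspect a real file, pass in the result of `open(path, "rb").read(14)`. A Type 0 file reports one track.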

Making SMFs

Musical performances are not usually created as SMFs; rather, a composition is recorded using a sequencer such as Digital Performer, Cubase, Sonar, etc. that saves MIDI data in its own format. However, most if not all sequencers offer a ‘Save As’ or ‘Export’ option that writes a Standard MIDI File.

Compositions in SMF format can be created and played back using most DAW software (Cubase, Logic, Sonar, Performer, FL Studio, Ableton Live, GarageBand (Type 1 SMF)) and other MIDI software applications. Many hardware products (digital pianos, synths, and workstations) can also create and play back SMF files. Check the manual of the MIDI products you own to find out about their SMF capabilities.

Setup Data

An SMF contains not only regular MIDI performance data (Channelized notes, lengths, pitch bend data, etc.) but should also have data (commonly referred to as a ‘header’) that contains additional set-up data (tempo, instrument selections per Channel, controller settings, etc.) as well as song information (copyright notices, composer, etc.).

How good an SMF will sound, or how true to its originally created state, can depend a lot on the header information. The header can exert control over the mix, effects, and even sound editing parameters in order to minimize inherent differences between one soundset and another. There is no standard set of data that you have to put in a header (indeed such data can also be placed in a spare ‘set-up’ bar in the body of the file itself), but generally speaking the more information you provide for the receiving sound device, the more defined the results will be, and so, presumably, the more to your tastes.

Depending upon the application you are using to create the file in the first place, header information may automatically be saved from within parameters set in the application, or may need to be manually placed in a ‘set-up’ bar before the music data commences.

Information that should be considered (per MIDI Channel) includes:

- Bank Select (0=GM) / Program Change #

- Reset All Controllers (not all devices may recognize this command so you may prefer to zero out or reset individual controllers)

- Initial Volume (CC7) (standard level = 100)

- Expression (CC11) (initial level set to 127)

- Hold pedal (0 = off)

- Pan (Center = 64)

- Modulation (0)

- Pitch bend range

- Reverb (0 = off)

- Chorus level (0 = off)
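The per-Channel settings listed above could be sketched as raw MIDI messages as follows. The controller numbers are the standard assignments from the MIDI 1.0 controller table (for example, 64 for the hold pedal, 10 for pan, 91 and 93 for reverb and chorus send), and pitch bend range is set through Registered Parameter 0. The function name, the default values, and the choice to send Reset All Controllers first (so that it cannot undo later settings) are assumptions made for this illustration.

```python
def channel_setup(channel, program, bend_range=2):
    """Return a list of setup messages (as bytes) for one Channel, 1..16."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1..16")
    cc = 0xB0 | (channel - 1)              # Control Change status byte
    return [
        bytes([cc, 121, 0]),               # Reset All Controllers
        bytes([cc, 0, 0]),                 # Bank Select MSB (0 = GM)
        bytes([cc, 32, 0]),                # Bank Select LSB
        bytes([0xC0 | (channel - 1), program]),  # Program Change
        bytes([cc, 7, 100]),               # Channel Volume
        bytes([cc, 11, 127]),              # Expression
        bytes([cc, 64, 0]),                # Hold pedal off
        bytes([cc, 10, 64]),               # Pan center
        bytes([cc, 1, 0]),                 # Modulation
        bytes([cc, 101, 0]),               # RPN MSB
        bytes([cc, 100, 0]),               # RPN LSB (RPN 0 = pitch bend sensitivity)
        bytes([cc, 6, bend_range]),        # Data Entry: range in semitones
        bytes([cc, 91, 0]),                # Reverb send off
        bytes([cc, 93, 0]),                # Chorus send off
    ]

piano_setup = channel_setup(1, 0)          # Channel 1, program number 0
```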

All files should also begin with a GM/GS/XG Reset message (if appropriate) and any other System Exclusive data that might be necessary to setup the target synthesizer. If RPNs or more detailed controller messages are being employed in the file these should also be reset or normalized in the header.

If you are inputting header data yourself it is advisable not to clump all such information together but rather to space it out at intervals of 5-10 ticks. Certainly if a file is designed to be looped, having too much data play simultaneously will cause most playback devices to ‘choke’ and throw off your timing.
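As a small sketch of that advice, the helper below assigns each setup message its own tick position instead of stacking everything at tick 0. The `GM_SYSTEM_ON` bytes are the Universal System Exclusive "General MIDI System On" message; the helper name and the default 5-tick spacing are illustrative choices.

```python
GM_SYSTEM_ON = bytes([0xF0, 0x7E, 0x7F, 0x09, 0x01, 0xF7])  # GM reset

def space_out(messages, spacing_ticks=5, start_tick=0):
    """Pair each message with an absolute tick, spacing_ticks apart."""
    return [(start_tick + i * spacing_ticks, msg)
            for i, msg in enumerate(messages)]

schedule = space_out([
    GM_SYSTEM_ON,
    bytes([0xB0, 7, 100]),   # Channel 1 volume
    bytes([0xB0, 10, 64]),   # Channel 1 pan center
])
```

A receiving device may need some time to process a reset, so in practice a longer gap after the first message may be sensible.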

Download the MIDI 1.0 specification that includes Standard MIDI File details

The Complete MIDI 1.0 Detailed Specification

About MIDI - Part 3: MIDI Messages

Part 3: MIDI Messages

The MIDI Message specification (or “MIDI Protocol”) is probably the most important part of MIDI.

MIDI is a music description language in digital (binary) form. It was designed for use with keyboard-based musical instruments, so the message structure is oriented to performance events, such as picking a note and then striking it, or setting typical parameters available on electronic keyboards. For example, to sound a note in MIDI you send a “Note On” message that names the note and carries a “velocity”, which determines how loud it plays relative to other notes. You can also adjust the overall loudness of all the notes with a “Channel Volume” message. Other MIDI messages select which instrument sounds to use, set stereo panning, and more.

The first specification (1983) did not define every possible “word” that can be spoken in MIDI, nor did it define every musical instruction that might be desired in an electronic performance. So over the past 20 or more years, companies have enhanced the original MIDI specification by defining additional performance control messages, and creating companion specifications which include:

- MIDI Machine Control

- MIDI Show Control

- MIDI Time Code

- General MIDI

- Downloadable Sounds

- Scalable Polyphony MIDI

Alternate Applications

MIDI Machine Control and MIDI Show Control are interesting extensions because instead of addressing musical instruments they address studio recording equipment (tape decks etc) and theatrical control (lights, smoke machines, etc.).

MIDI is also being used for control of devices where standard messages have not been defined by MMA, such as with audio mixing console automation.

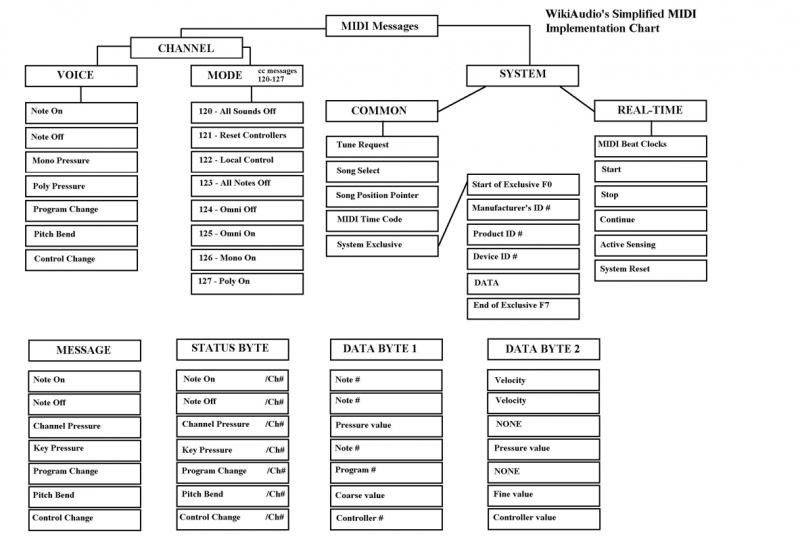

Different Kinds of MIDI Messages

A MIDI message is made up of an eight-bit status byte which is generally followed by one or two data bytes. There are a number of different types of MIDI messages. At the highest level, MIDI messages are classified as being either Channel Messages or System Messages. Channel messages are those which apply to a specific Channel, and the Channel number is included in the status byte for these messages. System messages are not Channel specific, and no Channel number is indicated in their status bytes.

Channel Messages may be further classified as being either Channel Voice Messages, or Mode Messages. Channel Voice Messages carry musical performance data, and these messages comprise most of the traffic in a typical MIDI data stream. Channel Mode messages affect the way a receiving instrument will respond to the Channel Voice messages.
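This classification can be read directly from the status byte. In the sketch below, the upper four bits select the message type and the lower four bits carry the Channel number; the status values are those of the MIDI 1.0 specification. The function name is illustrative, and the threshold of 120 for Channel Mode controllers follows the current specification.

```python
CHANNEL_VOICE = {
    0x80: "Note Off",
    0x90: "Note On",
    0xA0: "Polyphonic Key Pressure",
    0xB0: "Control Change",
    0xC0: "Program Change",
    0xD0: "Channel Pressure",
    0xE0: "Pitch Bend Change",
}

def classify(status, first_data=None):
    """Return (message kind, Channel 1..16 or None) for a status byte."""
    if status < 0x80:
        raise ValueError("data byte, not a status byte")
    if status >= 0xF0:
        return ("System", None)            # no Channel number in System messages
    kind = CHANNEL_VOICE[status & 0xF0]    # upper nibble: message type
    channel = (status & 0x0F) + 1          # lower nibble: Channel 1..16
    # Control Change with a controller number of 120..127 is a Channel Mode message.
    if kind == "Control Change" and first_data is not None and first_data >= 120:
        kind = "Channel Mode"
    return (kind, channel)
```

For example, `classify(0x92)` reports a Note On for Channel 3, while `classify(0xF8)` reports a System message with no Channel.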

Channel Voice Messages

Channel Voice Messages are used to send musical performance information. The messages in this category are the Note On, Note Off, Polyphonic Key Pressure, Channel Pressure, Pitch Bend Change, Program Change, and the Control Change messages.

Note On / Note Off / Velocity

In MIDI systems, the activation of a particular note and the release of the same note are considered as two separate events. When a key is pressed on a MIDI keyboard instrument or MIDI keyboard controller, the keyboard sends a Note On message on the MIDI OUT port. The keyboard may be set to transmit on any one of the sixteen logical MIDI channels, and the status byte for the Note On message will indicate the selected Channel number. The Note On status byte is followed by two data bytes, which specify key number (indicating which key was pressed) and velocity (how hard the key was pressed).

The key number is used in the receiving synthesizer to select which note should be played, and the velocity is normally used to control the amplitude of the note. When the key is released, the keyboard instrument or controller will send a Note Off message. The Note Off message also includes data bytes for the key number and for the velocity with which the key was released. The Note Off velocity information is normally ignored.
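As a sketch of the bytes involved, a Note On uses status `0x90` plus the Channel and a Note Off uses `0x80` plus the Channel, each followed by the key number and velocity (values from the MIDI 1.0 specification). The function names and the default release velocity of 64 are illustrative.

```python
def note_on(channel, key, velocity):
    """Build a Note On message. channel is 1..16; key and velocity are 0..127."""
    if not (1 <= channel <= 16 and 0 <= key <= 127 and 0 <= velocity <= 127):
        raise ValueError("value out of range")
    return bytes([0x90 | (channel - 1), key, velocity])

def note_off(channel, key, velocity=64):
    """Build a Note Off message; the release velocity is usually ignored."""
    if not (1 <= channel <= 16 and 0 <= key <= 127 and 0 <= velocity <= 127):
        raise ValueError("value out of range")
    return bytes([0x80 | (channel - 1), key, velocity])

press = note_on(1, 60, 100)    # middle C on Channel 1, moderately loud
release = note_off(1, 60)      # the matching release event
```

Many devices send a Note On with velocity 0 in place of a Note Off, and receivers are expected to treat the two the same way.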

Aftertouch

Some MIDI keyboard instruments have the ability to sense the amount of pressure which is being applied to the keys while they are depressed. This pressure information, commonly called “aftertouch”, may be used to control some aspects of the sound produced by the synthesizer (vibrato, for example). If the keyboard has a pressure sensor for each key, then the resulting “polyphonic aftertouch” information would be sent in the form of Polyphonic Key Pressure messages. These messages include separate data bytes for key number and pressure amount. It is currently more common for keyboard instruments to sense only a single pressure level for the entire keyboard. This “Channel aftertouch” information is sent using the Channel Pressure message, which needs only one data byte to specify the pressure value.
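The difference in message size is easy to see in a sketch. The status values (`0xA0` for Polyphonic Key Pressure, `0xD0` for Channel Pressure) are taken from the MIDI 1.0 specification; the function names are illustrative.

```python
def poly_key_pressure(channel, key, pressure):
    """Per-key aftertouch: status byte, key number, pressure (3 bytes)."""
    return bytes([0xA0 | (channel - 1), key & 0x7F, pressure & 0x7F])

def channel_pressure(channel, pressure):
    """Whole-keyboard aftertouch: status byte and pressure only (2 bytes)."""
    return bytes([0xD0 | (channel - 1), pressure & 0x7F])

per_key = poly_key_pressure(1, 60, 90)
whole_keyboard = channel_pressure(1, 90)
```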

Pitch Bend

The Pitch Bend Change message is normally sent from a keyboard instrument in response to changes in position of the pitch bend wheel. The pitch bend information is used to modify the pitch of sounds being played on a given Channel. The Pitch Bend message includes two data bytes to specify the pitch bend value. Two bytes are required to allow fine enough resolution to make pitch changes resulting from movement of the pitch bend wheel seem to occur in a continuous manner rather than in steps.
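Each data byte carries seven bits, so the two bytes combine into a 14-bit value from 0 to 16383, with 8192 meaning "no bend". The sketch below assumes the byte order given in the MIDI 1.0 specification (least significant seven bits first); the function names are illustrative.

```python
PITCH_BEND_CENTER = 8192   # wheel at rest

def pitch_bend(channel, value):
    """Build a Pitch Bend Change message from a 14-bit value (0..16383)."""
    if not 0 <= value <= 16383:
        raise ValueError("value must be 0..16383")
    lsb = value & 0x7F            # low 7 bits are sent first
    msb = (value >> 7) & 0x7F     # high 7 bits follow
    return bytes([0xE0 | (channel - 1), lsb, msb])

def decode_pitch_bend(message):
    """Recover the 14-bit value from a Pitch Bend Change message."""
    return message[1] | (message[2] << 7)

centered = pitch_bend(1, PITCH_BEND_CENTER)
```

With a single 7-bit data byte there would be only 128 steps across the wheel's travel; 14 bits give 16,384.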

Program Change

The Program Change message is used to specify the type of instrument which should be used to play sounds on a given Channel. This message needs only one data byte which specifies the new program number.

Control Change

MIDI Control Change messages are used to control a wide variety of functions in a synthesizer. Control Change messages, like other MIDI Channel messages, should only affect the Channel number indicated in the status byte. The Control Change status byte is followed by one data byte indicating the “controller number”, and a second byte which specifies the “control value”. The controller number identifies which function of the synthesizer is to be controlled by the message. A complete list of assigned controllers is found in the MIDI 1.0 Detailed Specification.

– Bank Select

Controller number zero (with 32 as the LSB) is defined as the bank select. The bank select function is used in some synthesizers in conjunction with the MIDI Program Change message to expand the number of different instrument sounds which may be specified (the Program Change message alone allows selection of one of 128 possible program numbers). The additional sounds are selected by preceding the Program Change message with Control Change messages which specify new values for Controller zero and Controller 32, allowing 16,384 banks of 128 sounds each.
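A minimal sketch of that sequence follows, assuming a 14-bit bank number split into an MSB for Controller 0 and an LSB for Controller 32. The function name is illustrative, and how a given bank number maps to actual sounds depends on the synthesizer.

```python
def select_sound(channel, bank, program):
    """Bank Select MSB, Bank Select LSB, then Program Change, as one byte string."""
    if not (0 <= bank < 128 * 128 and 0 <= program <= 127):
        raise ValueError("bank must be 0..16383 and program 0..127")
    cc = 0xB0 | (channel - 1)
    return bytes([
        cc, 0, bank >> 7,                 # Controller 0: upper 7 bits of the bank
        cc, 32, bank & 0x7F,              # Controller 32: lower 7 bits of the bank
        0xC0 | (channel - 1), program,    # Program Change selects within the bank
    ])

message = select_sound(1, 129, 5)
```

The bank choice normally takes effect only when the following Program Change arrives, which is why the order matters.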

Since the MIDI specification does not describe the manner in which a synthesizer’s banks are to be mapped to Bank Select messages, there is no standard way for a Bank Select message to select a specific synthesizer bank. Some manufacturers, such as Roland (with “GS”) and Yamaha (with “XG”), have adopted their own practices to assure some standardization within their own product lines.

– RPN / NRPN

Controller number 6 (Data Entry), in conjunction with Controller numbers 96 (Data Increment), 97 (Data Decrement), 98 (Non-Registered Parameter Number LSB), 99 (Non-Registered Parameter Number MSB), 100 (Registered Parameter Number LSB), and 101 (Registered Parameter Number MSB), extends the number of controllers available via MIDI. Parameter data is transferred by first selecting the parameter number to be edited using controllers 98 and 99 or 100 and 101, and then adjusting the data value for that parameter using controller number 6, 96, or 97.

RPN and NRPN are typically used to send parameter data to a synthesizer in order to edit sound patches or other data. Registered parameters are those which have been assigned some particular function by the MIDI Manufacturers Association (MMA) and the Japan MIDI Standards Committee (JMSC). For example, there are Registered Parameter numbers assigned to control pitch bend sensitivity and master tuning for a synthesizer. Non-Registered parameters have not been assigned specific functions, and may be used for different functions by different manufacturers. Here again, Roland and Yamaha, among others, have adopted their own practices to assure some standardization.
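The select-then-adjust sequence can be sketched as follows for a Registered Parameter. Beyond the controllers named above, the sketch also uses Controller 38 (Data Entry LSB) and finishes by selecting the "null" parameter (127, 127) so that stray Data Entry messages have no effect; both are standard practice but are additions to what this article describes. The function name is illustrative.

```python
def rpn(channel, param_msb, param_lsb, value_msb, value_lsb=0):
    """Return the Control Change messages that set one Registered Parameter."""
    cc = 0xB0 | (channel - 1)
    return [
        bytes([cc, 101, param_msb]),   # select parameter: RPN MSB
        bytes([cc, 100, param_lsb]),   # select parameter: RPN LSB
        bytes([cc, 6, value_msb]),     # Data Entry MSB
        bytes([cc, 38, value_lsb]),    # Data Entry LSB
        bytes([cc, 101, 127]),         # deselect: null RPN MSB
        bytes([cc, 100, 127]),         # deselect: null RPN LSB
    ]

# RPN 0,0 is pitch bend sensitivity: 12 semitones and 0 cents on Channel 1.
bend_range = rpn(1, 0, 0, 12, 0)
```

A Non-Registered Parameter would use controllers 99 and 98 for the selection step instead of 101 and 100.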

Channel Mode Messages

Channel Mode messages (MIDI controller numbers 120 through 127) affect the way a synthesizer responds to MIDI data. Controller number 120 silences all sound on the Channel, and controller number 121 is used to reset all controllers. Controller number 122 is used to enable or disable Local Control (in a MIDI synthesizer which has its own keyboard, the functions of the keyboard controller and the synthesizer can be isolated by turning Local Control off). Controller number 123 turns all notes off. Controller numbers 124 through 127 are used to select between Omni Mode On or Off, and to select between the Mono Mode or Poly Mode of operation.

When Omni mode is On, the synthesizer will respond to incoming MIDI data on all channels. When Omni mode is Off, the synthesizer will only respond to MIDI messages on one Channel. When Poly mode is selected, incoming Note On messages are played polyphonically. This means that when multiple Note On messages are received, each note is assigned its own voice (subject to the number of voices available in the synthesizer). The result is that multiple notes are played at the same time. When Mono mode is selected, a single voice is assigned per MIDI Channel. This means that only one note can be played on a given Channel at a given time.

Most modern MIDI synthesizers will default to Omni On/Poly mode of operation. In this mode, the synthesizer will play note messages received on any MIDI Channel, and notes received on each Channel are played polyphonically. In the Omni Off/Poly mode of operation, the synthesizer will receive on a single Channel and play the notes received on this Channel polyphonically. This mode could be useful when several synthesizers are daisy-chained using MIDI THRU. In this case each synthesizer in the chain can be set to play one part (the MIDI data on one Channel), and ignore the information related to the other parts.

Note that a MIDI instrument has one MIDI Channel which is designated as its “Basic Channel”. The Basic Channel assignment may be hard-wired, or it may be selectable. Mode messages can only be received by an instrument on the Basic Channel.
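As a sketch, the four Omni/Poly combinations can be produced from two mode messages sent on the Basic Channel. The controller assignments (124 Omni Off, 125 Omni On, 126 Mono On, 127 Poly On) come from the MIDI 1.0 specification; the function name is illustrative, and the data byte is left at 0.

```python
OMNI_OFF, OMNI_ON, MONO_ON, POLY_ON = 124, 125, 126, 127

def set_mode(basic_channel, omni, poly):
    """Return the two Channel Mode messages for the requested combination."""
    cc = 0xB0 | (basic_channel - 1)    # mode messages use the Control Change status
    return [
        bytes([cc, OMNI_ON if omni else OMNI_OFF, 0]),
        bytes([cc, POLY_ON if poly else MONO_ON, 0]),
    ]

omni_off_poly = set_mode(3, omni=False, poly=True)   # respond to Channel 3 only
```

This is the setting each synthesizer in a MIDI THRU daisy chain would use, with a different Basic Channel per instrument.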

System Messages

MIDI System Messages are classified as being System Common Messages, System Real Time Messages, or System Exclusive Messages. System Common messages are intended for all receivers in the system. System Real Time messages are used for synchronization between clock-based MIDI components. System Exclusive messages include a Manufacturer’s Identification (ID) code, and are used to transfer any number of data bytes in a format specified by the referenced manufacturer.

System Common Messages

The System Common Messages which are currently defined include MTC Quarter Frame, Song Select, Song Position Pointer, Tune Request, and End Of Exclusive (EOX). The MTC Quarter Frame message is part of the MIDI Time Code information used for synchronization of MIDI equipment and other equipment, such as audio or video tape machines.

The Song Select message is used with MIDI equipment, such as sequencers or drum machines, which can store and recall a number of different songs. The Song Position Pointer is used to set a sequencer to start playback of a song at some point other than at the beginning. The Song Position Pointer value is related to the number of MIDI clocks which would have elapsed between the beginning of the song and the desired point in the song. This message can only be used with equipment which recognizes MIDI System Real Time Messages (MIDI Sync).
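In the MIDI 1.0 specification the Song Position Pointer counts "MIDI beats", where one MIDI beat equals six MIDI clocks (a sixteenth note), and the 14-bit value is sent least significant seven bits first after status byte `0xF2`. The sketch below builds the message under those assumptions; the function names and the 4/4 default are illustrative.

```python
CLOCKS_PER_MIDI_BEAT = 6   # one MIDI beat = one sixteenth note

def bar_to_midi_beats(bar, quarter_notes_per_bar=4):
    """MIDI beats elapsed before the start of a 1-based bar number."""
    return (bar - 1) * quarter_notes_per_bar * 4   # 4 sixteenths per quarter note

def song_position(midi_beats):
    """Build a Song Position Pointer message from a 14-bit MIDI beat count."""
    if not 0 <= midi_beats <= 16383:
        raise ValueError("position must be 0..16383")
    return bytes([0xF2, midi_beats & 0x7F, (midi_beats >> 7) & 0x7F])

start_of_bar_9 = song_position(bar_to_midi_beats(9))
clocks_elapsed = bar_to_midi_beats(9) * CLOCKS_PER_MIDI_BEAT
```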

The Tune Request message is generally used to request an analog synthesizer to retune its internal oscillators. This message is generally not needed with digital synthesizers.

The EOX message is used to flag the end of a System Exclusive message, which can include a variable number of data bytes.

System Real Time Messages

The MIDI System Real Time messages are used to synchronize all of the MIDI clock-based equipment within a system, such as sequencers and drum machines. Most of the System Real Time messages are normally ignored by keyboard instruments and synthesizers. To help ensure accurate timing, System Real Time messages are given priority over other messages, and these single-byte messages may occur anywhere in the data stream (a Real Time message may appear between the status byte and data byte of some other MIDI message).

The System Real Time messages are the Timing Clock, Start, Continue, Stop, Active Sensing, and the System Reset message. The Timing Clock message is the master clock which sets the tempo for playback of a sequence. The Timing Clock message is sent 24 times per quarter note. The Start, Continue, and Stop messages are used to control playback of the sequence.
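Two consequences of these rules can be sketched in code: the spacing of Timing Clock bytes follows directly from the tempo, and a receiver has to pick Real Time bytes out of the stream wherever they appear. The status values are from the MIDI 1.0 specification; the function names are illustrative.

```python
REAL_TIME = {
    0xF8: "Timing Clock",
    0xFA: "Start",
    0xFB: "Continue",
    0xFC: "Stop",
    0xFE: "Active Sensing",
    0xFF: "System Reset",
}

def clock_interval_seconds(bpm):
    """Seconds between Timing Clock messages at 24 clocks per quarter note."""
    return 60.0 / (bpm * 24)

def split_real_time(stream):
    """Separate single-byte Real Time messages from the rest of a byte stream."""
    real_time = [b for b in stream if b in REAL_TIME]
    remainder = bytes(b for b in stream if b not in REAL_TIME)
    return real_time, remainder

# A Timing Clock byte arriving in the middle of a Note On message.
clocks, rest = split_real_time(bytes([0x90, 0xF8, 60, 100]))
```

At 120 beats per minute the interval is about 20.8 milliseconds, and the Note On in the example is recovered intact once the clock byte is removed.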

The Active Sensing signal is used to help eliminate “stuck notes” which may occur if a MIDI cable is disconnected during playback of a MIDI sequence. Without Active Sensing, if a cable is disconnected during playback, then some notes may be left playing indefinitely because they have been activated by a Note On message, but the corresponding Note Off message will never be received.

The System Reset message, as the name implies, is used to reset and initialize any equipment which receives the message. This message is generally not sent automatically by transmitting devices, and must be initiated manually by a user.

System Exclusive Messages

System Exclusive messages may be used to send data such as patch parameters or sample data between MIDI devices. Manufacturers of MIDI equipment may define their own formats for System Exclusive data. Manufacturers are granted unique identification (ID) numbers by the MMA or the JMSC, and the manufacturer ID number is included as part of the System Exclusive message. The manufacturer’s ID is followed by any number of data bytes, and the data transmission is terminated with the EOX message. Manufacturers are required to publish the details of their System Exclusive data formats, and other manufacturers may freely utilize these formats, provided that they do not alter or utilize the format in a way which conflicts with the original manufacturer’s specifications.
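The framing described above can be sketched as follows: the status byte `0xF0`, the manufacturer ID, the data bytes, and the EOX byte `0xF7`. Every byte between the two framing bytes must be a 7-bit value. The example uses ID `0x7D`, which the MIDI specification sets aside for non-commercial use; the function name and payload are illustrative.

```python
SYSEX_START = 0xF0
EOX = 0xF7

def sysex(manufacturer_id, payload):
    """Frame a System Exclusive message.

    manufacturer_id and payload are sequences of ints, each 0..127.
    """
    body = bytes(manufacturer_id) + bytes(payload)
    if any(b > 0x7F for b in body):
        raise ValueError("System Exclusive data bytes must be 0..127")
    return bytes([SYSEX_START]) + body + bytes([EOX])

message = sysex([0x7D], [0x01, 0x02, 0x03])   # hypothetical payload
```

Because data bytes are limited to seven bits, formats that carry 8-bit data (such as sample dumps) have to repack it before sending.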

Certain System Exclusive ID numbers are reserved for special protocols. Among these are the MIDI Sample Dump Standard, which is a System Exclusive data format defined in the MIDI specification for the transmission of sample data between MIDI devices, as well as MIDI Show Control and MIDI Machine Control.