KnittedKeyboard: A multi-modal, textile-based soft MIDI controller

KnittedKeyboard is a recent project from the Responsive Environments Group at the MIT Media Lab. The instrument offers new interactions and tactile experiences for musical expression, and it can be easily worn, folded, rolled up, and packed in luggage like a pair of socks or a scarf. The project was initially motivated by discussions with the late Lyle Mays, composer and jazz pianist in the Pat Metheny Group, who wished for a rollable fabric keyboard for composing and performing music on the road.

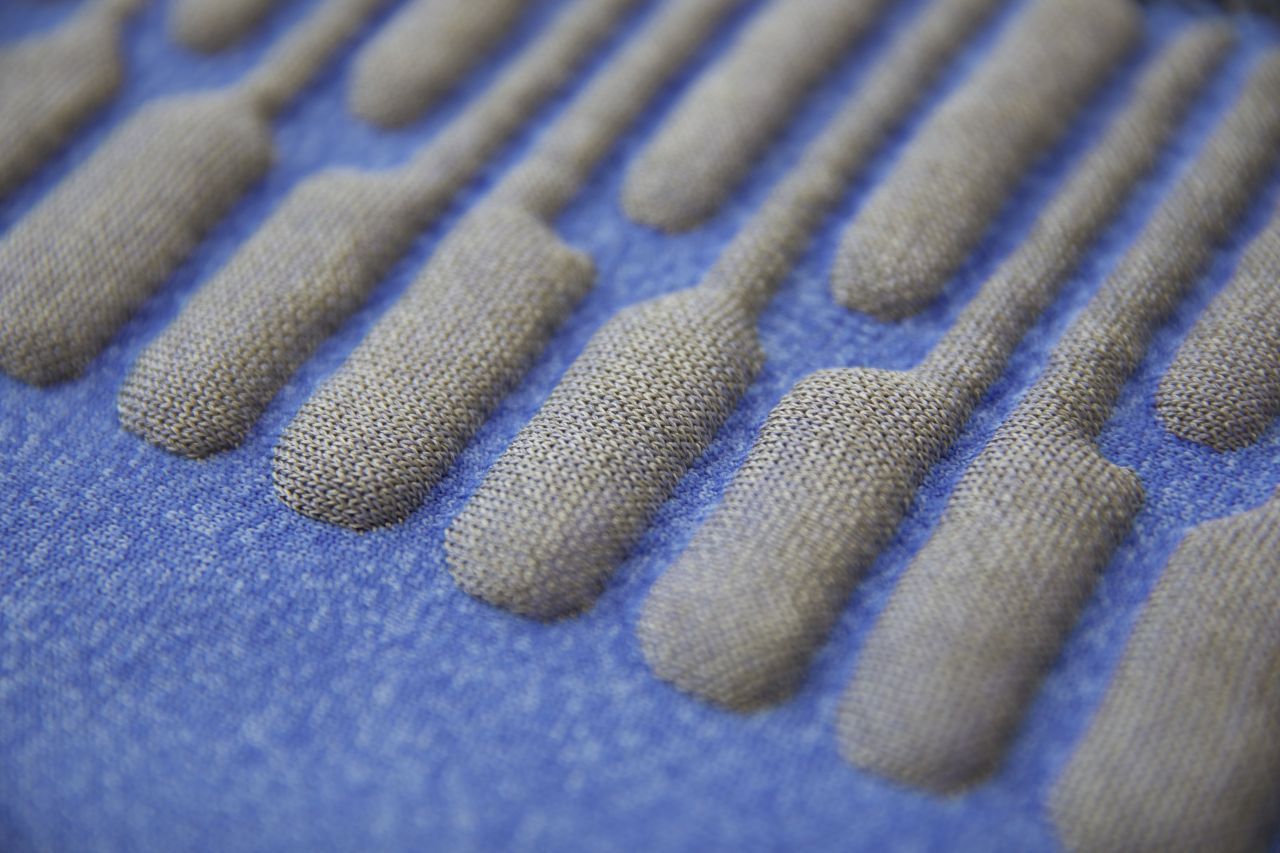

The KnittedKeyboard uses digital machine knitting of functional (electrically conductive and thermoplastic) and non-functional (polyester) fibers to produce a seamless, customized, 5-octave piano-patterned musical textile. The keys, individually and in combination, can simultaneously sense touch as well as continuous proximity, stretch, and pressure. The instrument combines discrete controls from conventional keystrokes with expressive continuous controls from non-contact, theremin-inspired proximity sensing (waving and hovering in the air) and from physical interactions enabled by the knitted fabric sensors (e.g. squeezing, pulling, stretching, and twisting). The KnittedKeyboard gives performers an intimate, organic tactile experience as they explore the seamless texture and materiality of the electronic textile.

The sensing mechanism is based on capacitive and piezo-resistive sensing. Every key acts as an electrode and is sequentially charged and discharged. This creates an electromagnetic field that is disrupted by an approaching hand, which makes it possible to detect contact touch and non-contact proxemic gestures such as hovering or waving in the air, and to calculate strike velocity. The piezo-resistive layers underneath measure the pressure and stretch exerted on the knitted keyboard. All of the sensor data is converted to Musical Instrument Digital Interface (MIDI) messages by a central microprocessor. These messages correspond to certain timbral, dynamic, and temporal variations (filter resonance, frequency, glide, reverb, amp, distortion, et cetera), as well as pitch bend. Audio sequencing and generation software such as Ableton Live and Max/MSP maps these MIDI messages to their corresponding channels, controls, notes, and effects.
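To make the sensor-to-MIDI step more concrete, here is a minimal Python sketch of how normalized sensor readings could be packed into standard MIDI channel messages. It is not the project's firmware. The function names, the normalization of readings to 0..1, the `max_rate` constant, and the way strike velocity is estimated from the rise in proximity are all assumptions made for illustration. Only the byte layouts of Note On, Control Change, and Pitch Bend come from the MIDI 1.0 specification.

```python
from typing import List


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def scale_to_7bit(x: float) -> int:
    """Map a normalized reading in [0, 1] to a 7-bit MIDI data value (0..127)."""
    return int(round(clamp(x, 0.0, 1.0) * 127))


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status 0x9n, then note number and velocity (7 bits each)."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Control Change: status 0xBn, then controller number and value."""
    return bytes([0xB0 | (channel & 0x0F), controller & 0x7F, value & 0x7F])


def pitch_bend(channel: int, amount: float) -> bytes:
    """Pitch Bend: status 0xEn, then a 14-bit value sent LSB first.

    amount is a hypothetical normalized bend in [-1, 1]; 0 maps to the
    14-bit center value 8192.
    """
    amount = clamp(amount, -1.0, 1.0)
    value = int(round(8192 + amount * 8191))
    return bytes([0xE0 | (channel & 0x0F), value & 0x7F, (value >> 7) & 0x7F])


def strike_velocity(proximity: List[float], sample_period_s: float,
                    max_rate: float = 50.0) -> int:
    """Estimate key velocity from how fast proximity rose just before touch.

    Assumption: proximity holds normalized samples (0 = far, 1 = touching)
    taken every sample_period_s seconds; max_rate is an illustrative
    calibration constant, not a measured one.
    """
    if len(proximity) < 2:
        return 64  # fall back to a mid-range velocity
    rate = (proximity[-1] - proximity[-2]) / sample_period_s
    # Velocity 0 would read as Note Off on many synths, so keep it >= 1.
    return max(1, scale_to_7bit(rate / max_rate))


if __name__ == "__main__":
    # A key touch becomes a Note On for middle C (note 60).
    vel = strike_velocity([0.0, 0.5], sample_period_s=0.01)
    print(note_on(0, 60, vel).hex())
    # A hover reading drives a controller; CC 74 is an arbitrary example choice.
    print(control_change(0, 74, scale_to_7bit(0.25)).hex())
    # A normalized stretch reading bends the pitch upward.
    print(pitch_bend(0, 0.5).hex())
```

In a setup like the one described, software such as Ableton Live or Max/MSP would receive these bytes and map each controller number to an effect parameter. Which sensor drives which parameter is a design decision that this sketch leaves open.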

“Fabric of Time and Space”, shown in the video below, is a contemporary musical piece written exclusively for KnittedKeyboard to illustrate the multi-dimensional expressiveness of the instrument. The piece is a metaphor for the expanding and contracting nature of the universe, represented musically by the glissandi of the melody and the interplay between major and minor chords. The metaphorical perturbations of space-time are expressed through the interaction between the performer and the fabric, and the musical translation of these expressions is used to shape the envelope of the sound. The underlying technology enables further exploration of soft and malleable gestural musical interfaces that leverage the unique mechanical structures of the materials, as well as the intrinsic electrical properties of the knitted sensors. For further information about this project, please access its main page.

KnittedKeyboard II from MIT Media Lab on Vimeo.